Top 9 articles on the tanh function in neural networks in 2022

Below is the best information on the tanh function in neural networks, compiled by our own team at evbn:


1. Activation Functions in Neural Networks [12 Types & Use Cases]

Author: www.v7labs.com

Date Submitted: 02/08/2019 02:44 PM

Average star rating: 3 ⭐ (70239 reviews)

Summary: A neural network activation function is a function that is applied to the output of a neuron. Learn about different types of activation functions and how they work.

Matching excerpt: The larger (more positive) the input, the closer the output is to 1.0; the smaller (more negative) the input, the closer the output is to -1.0.

![Activation Functions in Neural Networks [12 Types & Use Cases]](https://assets-global.website-files.com/5d7b77b063a9066d83e1209c/627d12431fbd5e61913b7423_60be4975a399c635d06ea853_hero_image_activation_func_dark.png)
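To see this behavior directly, here is a minimal Python sketch using the standard library's `math.tanh`. The sample inputs are arbitrary values chosen only for illustration.

```python
import math

# Evaluate tanh at increasingly large negative and positive inputs.
# The outputs move toward -1.0 and +1.0. In exact arithmetic they stay strictly
# inside (-1, 1); in floating point, very large inputs eventually round to exactly ±1.0.
inputs = [-10.0, -3.0, -1.0, 0.0, 1.0, 3.0, 10.0]
outputs = {x: math.tanh(x) for x in inputs}

for x, y in outputs.items():
    print(f"tanh({x:+.1f}) = {y:+.6f}")
```

The outputs increase monotonically with the input and are centered on `tanh(0) = 0`.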

2. Activation Functions in Neural Networks

Author: towardsdatascience.com

Date Submitted: 05/14/2021 03:25 PM

Average star rating: 5 ⭐ (45306 reviews)

Summary: The linear (identity) activation function is a straight line, so its output is not confined to any range. It does not help with the complexity or the various parameters of typical data…

Matching excerpt: The tanh function is mainly used for classification between two classes. Both tanh and logistic sigmoid activation functions are used in feed-…
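A minimal sketch of tanh used for a two-class decision, assuming the labels are encoded as -1 and +1 and the class is read from the sign of the output. The function `predict_class` and its weights and bias are hypothetical, not taken from the cited article. Note that the weighted sum `z` is linear and unbounded, while `tanh(z)` is squashed into (-1, 1).

```python
import math

def predict_class(features, weights, bias):
    """Two-class decision from a single tanh output unit.

    Assumes labels are encoded as -1 and +1; weights and bias are hypothetical.
    """
    z = sum(w * x for w, x in zip(weights, features)) + bias  # unbounded linear score
    score = math.tanh(z)                                     # squashed into (-1, 1)
    return (1 if score >= 0 else -1), score

weights = [0.8, -0.5]  # hypothetical values, not learned from data
bias = 0.1

label, score = predict_class([2.0, 1.0], weights, bias)
print(label, round(score, 4))
```

Thresholding at 0 is the natural choice here because tanh is symmetric around the origin.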

3. 6 Types of Activation Function in Neural Networks You Need to Know | upGrad blog

Author: www.upgrad.com

Date Submitted: 12/13/2021 08:51 AM

Average star rating: 3 ⭐ (34858 reviews)

Summary: If you are a deep learning developer, you must have heard about the importance of ANNs and activation functions. Check out this guide on 6 types of activation functions in neural networks.

Matching excerpt: … various activation functions in artificial neural networks…

4. Activation Functions: Sigmoid vs Tanh | Baeldung on Computer Science

Author: paperswithcode.com

Date Submitted: 11/10/2021 03:00 AM

Average star rating: 4 ⭐ (76863 reviews)

Summary: Explore two activation functions, the tanh and the sigmoid.

Matching excerpt: The biggest advantage of the tanh function is that it produces a zero-centered output, thereby supporting the backpropagation process. The tanh …
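A rough illustration of what "zero-centered" means, assuming inputs spread symmetrically around zero (the sample `xs` is made up for this sketch): the average tanh output sits near 0, while the average logistic sigmoid output sits near 0.5 because sigmoid outputs are always positive.

```python
import math

def sigmoid(x):
    """Logistic sigmoid, output in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))

# Hypothetical inputs, evenly spaced and symmetric around zero (-3.0 ... 3.0).
xs = [i / 10 for i in range(-30, 31)]

mean_tanh = sum(math.tanh(x) for x in xs) / len(xs)
mean_sigmoid = sum(sigmoid(x) for x in xs) / len(xs)

print(f"mean tanh output:    {mean_tanh:+.4f}")     # close to 0: zero-centered
print(f"mean sigmoid output: {mean_sigmoid:+.4f}")  # close to 0.5: always positive
```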

5. Activation Functions | What are Activation Functions

Author: www.baeldung.com

Date Submitted: 09/20/2021 03:22 PM

Average star rating: 5 ⭐ (67626 reviews)

Summary: Activation functions are functions used in a neural network that take the weighted sum of a neuron's inputs plus its bias and use it to decide whether, and how strongly, the neuron is activated.

Matching excerpt: Tanh Activation is an activation function used for neural networks: $$f(x) = \frac{e^{x} - e^{-x}}{e^{x} + e^{-x}}$$ Historically, the tanh …
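The tanh formula can be checked numerically. This sketch codes it literally (the helper names `tanh_from_definition` and `sigmoid` are illustrative) and compares it with Python's `math.tanh`. It also checks the standard identity tanh(x) = 2 * sigmoid(2x) - 1, which shows that tanh is a rescaled and shifted logistic sigmoid.

```python
import math

def tanh_from_definition(x):
    """tanh(x) = (e^x - e^-x) / (e^x + e^-x), written directly from the formula.

    math.exp raises OverflowError for x above roughly 709, so this naive form
    only suits moderate inputs; math.tanh handles large inputs safely.
    """
    ex, emx = math.exp(x), math.exp(-x)
    return (ex - emx) / (ex + emx)

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

for x in [-2.0, -0.5, 0.0, 0.5, 2.0]:
    direct = tanh_from_definition(x)
    # tanh is a rescaled, shifted logistic sigmoid: tanh(x) = 2*sigmoid(2x) - 1
    via_sigmoid = 2.0 * sigmoid(2.0 * x) - 1.0
    assert math.isclose(direct, math.tanh(x), abs_tol=1e-12)
    assert math.isclose(via_sigmoid, math.tanh(x), abs_tol=1e-12)
    print(f"x={x:+.1f}  tanh={direct:+.6f}")
```

In practice, prefer the library function over the literal formula to avoid overflow.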

6. Activation Functions in Deep Learning: Sigmoid, tanh, ReLU – KI Tutorials

Author: insideaiml.com

Date Submitted: 12/01/2020 05:45 PM

Average star rating: 3 ⭐ (88299 reviews)

Summary: Sigmoid, Tanh or ReLU? In this article we go into detail about the most important activation functions in deep learning and explain when each activation function should be used when implementing neural networks.

Matching excerpt: We observe that the maximum gradient of tanh is four times greater than the maximum gradient of the sigmoid function. This means that using the tanh activation …
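The "four times" figure refers to the peak of each derivative, at x = 0. A small sketch, assuming the standard closed-form derivatives 1 - tanh(x)^2 and sigmoid(x) * (1 - sigmoid(x)): the ratio is exactly 4 at the origin but not elsewhere. At x = 3, for example, the tanh derivative is already smaller than the sigmoid's, because tanh saturates faster.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def d_tanh(x):
    # d/dx tanh(x) = 1 - tanh(x)^2
    t = math.tanh(x)
    return 1.0 - t * t

def d_sigmoid(x):
    # d/dx sigmoid(x) = sigmoid(x) * (1 - sigmoid(x))
    s = sigmoid(x)
    return s * (1.0 - s)

# Both derivatives peak at x = 0: 1.0 for tanh and 0.25 for sigmoid.
print(d_tanh(0.0), d_sigmoid(0.0))   # 1.0 0.25
print(d_tanh(0.0) / d_sigmoid(0.0))  # 4.0

# Away from the origin the ratio changes; at x = 3 tanh's slope is the smaller one.
print(d_tanh(3.0) < d_sigmoid(3.0))  # True
```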

7. Activation functions in Neural Networks – GeeksforGeeks

Author: www.analyticsvidhya.com

Date Submitted: 04/07/2019 03:10 AM

Average star rating: 5 ⭐ (63516 reviews)

Summary: A computer science portal for geeks. It contains well-written, well-thought-out and well-explained computer science and programming articles, quizzes, and practice/competitive programming/company interview questions.

Matching excerpt: Near the origin, tanh is very close to the identity function y = x. When the value of the activation function is low, the matrix operation can be directly …
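A quick numerical check of the near-origin claim, using only the standard library: the Taylor series of tanh begins x - x^3/3, so the gap between tanh(x) and x shrinks roughly like x^3/3 as x approaches 0. The sample points are arbitrary.

```python
import math

# Near the origin tanh(x) is approximately x (Taylor series: x - x**3/3 + ...),
# so the gap |tanh(x) - x| shrinks roughly like |x|**3 / 3.
for x in [0.5, 0.1, 0.01]:
    gap = abs(math.tanh(x) - x)
    print(f"x={x:<5} tanh(x)={math.tanh(x):.8f}  gap={gap:.2e}  x^3/3={x**3 / 3:.2e}")
```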

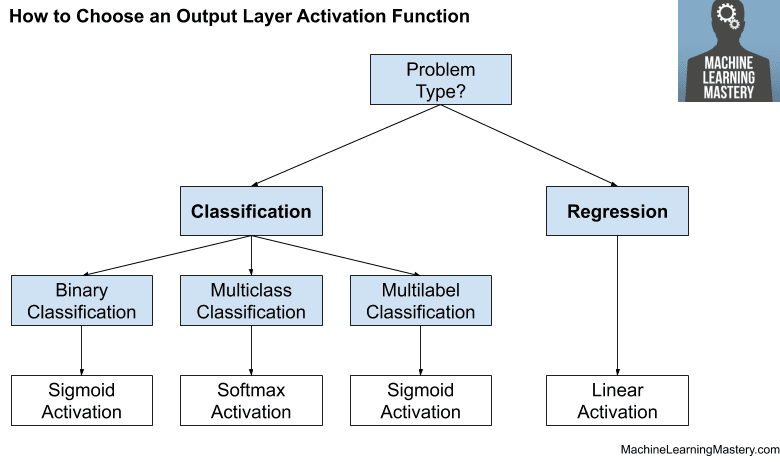

8. How to Choose an Activation Function for Deep Learning – MachineLearningMastery.com

Author: artemoppermann.com

Date Submitted: 11/10/2020 04:21 AM

Average star rating: 5 ⭐ (54486 reviews)

Summary:

Matching excerpt: The tanh function is just another possible function that can be used as a non-linear activation function between layers of a neural network…
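A minimal sketch of tanh used between layers: a forward pass through a hypothetical 2-3-1 network with made-up weights. The names `dense` and `forward` are illustrative, not a library API. Without the tanh, the two layers would compose into a single linear map, so the non-linearity is what lets stacked layers represent more than one linear layer could.

```python
import math

def dense(inputs, weights, biases):
    """One fully connected layer: weighted sum of the inputs plus a bias, per unit."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]

def forward(x):
    # Hypothetical weights for a 2-input -> 3-hidden -> 1-output network.
    w1 = [[0.5, -0.2], [0.3, 0.8], [-0.6, 0.1]]
    b1 = [0.0, 0.1, -0.1]
    w2 = [[1.0, -0.7, 0.4]]
    b2 = [0.05]

    hidden = [math.tanh(z) for z in dense(x, w1, b1)]  # tanh non-linearity between layers
    return dense(hidden, w2, b2)[0]                     # linear output unit

print(forward([1.0, 2.0]))
```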

9. Why tanh outperforms sigmoid | Medium

Author: www.geeksforgeeks.org

Date Submitted: 03/03/2020 02:46 AM

Average star rating: 3 ⭐ (21654 reviews)

Summary: The oldest and most popular activation functions are tanh and sigmoid. Experimenting with them on an MLP gives some very interesting and surprising results.

Matching excerpt: As with the sigmoid function, neurons saturate at large negative and positive values, and the derivative of the function approaches zero (blue …
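The saturation described here can be seen numerically. This sketch, using only the standard library, prints the tanh derivative at growing |x|, then multiplies it through five units that each receive a pre-activation of 3.0. That chain is a hypothetical, simplified setup (unit weights, identical inputs) meant only to show how the gradient shrinks during backpropagation.

```python
import math

def d_tanh(x):
    # Derivative of tanh: 1 - tanh(x)^2
    return 1.0 - math.tanh(x) ** 2

# As |x| grows the unit saturates: tanh(x) approaches ±1 and its derivative approaches 0.
for x in [0.0, 2.0, 5.0, -5.0, 10.0]:
    print(f"x={x:+5.1f}  tanh={math.tanh(x):+.6f}  derivative={d_tanh(x):.2e}")

# Chain rule through several saturated units (hypothetical: every unit
# receives pre-activation 3.0 and all weights are 1): the gradient shrinks geometrically.
grad = 1.0
for _ in range(5):
    grad *= d_tanh(3.0)
print(f"gradient after 5 saturated tanh units: {grad:.2e}")
```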
