Re-imagining Integration Patterns with AWS Native Services

The acceleration of your business’ digital aspirations depends on fast, connected and reliable data flows. This need will be amplified in the post-COVID era where the transition to digital customer channels, business models and remote working is expected to accelerate.

In the first of this two-part series, we explored the value proposition of a cloud-native approach to integration and how it overcomes the cost, time and operational inertia of implementing integrations with traditional approaches. In part two, we demonstrate how three native AWS services (Amazon SQS, Amazon SNS and Amazon Kinesis Data Streams) enable point-to-point messaging, publish-subscribe messaging and real-time streaming.

Cloud-Native Integration Solutions in Action

The original Enterprise Integration Patterns were published in 2003 by Gregor Hohpe and Bobby Woolf to help architects tackle complex integration challenges in the enterprise using proven, composable and reusable patterns. Whilst the technology landscape has changed drastically since then, evolving from point-to-point and pub-sub messaging to real-time event stream processing, the foundational patterns have proven durable.

As highlighted in part one of this series, AWS, as a platform-of-best-fit, allows business stakeholders, architects and builders to compose the right patterns with the right tools and to accelerate the delivery of their innovations and business value.

SCENARIO 1: POINT-TO-POINT, ASYNCHRONOUS MESSAGING

As a testament to the timeless nature of the EAI patterns, the simple point-to-point messaging scenario of getting information from A to B remains relevant in enterprises large and small.

Business Use Cases

- One-way data push from one source system to one destination system

- Event notification from one microservice to another microservice

- Request-response data exchange between two applications or application components (SaaS, on-premises or microservice)

Enterprise Application Integration Patterns

A point-to-point solution typically involves:

- A Point-to-Point Channel with ordered messaging

- Polling Consumers that call the channel when they are ready to consume a message

- A Dead Letter Channel to collect messages that cannot be delivered

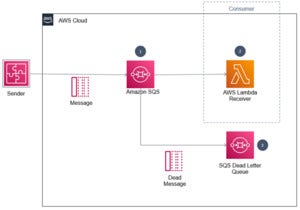

Figure 2: A point-to-point messaging integration with a dead letter channel handling messages that cannot be delivered. Diagram courtesy of Enterprise Integration Patterns – Dead Letter Channel
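The three elements listed above can be modelled in a few lines of Python. This is a minimal in-memory sketch, not an AWS API: `PointToPointChannel`, `send`, `poll` and `max_receive_count` are hypothetical names chosen for illustration. Each message is delivered to exactly one consumer, the consumer polls only when it is ready, a failed message is retried in place so ordering is kept, and a message that fails `max_receive_count` times is routed to the Dead Letter Channel.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Callable, Deque, List


@dataclass
class Message:
    body: str
    receive_count: int = 0


@dataclass
class PointToPointChannel:
    """Hypothetical in-memory Point-to-Point Channel with a Dead Letter Channel."""

    max_receive_count: int = 3
    queue: Deque[Message] = field(default_factory=deque)
    dead_letters: List[Message] = field(default_factory=list)

    def send(self, body: str) -> None:
        self.queue.append(Message(body))

    def poll(self, handler: Callable[[str], None]) -> bool:
        """Polling Consumer: take the next message; return False if the channel is empty."""
        if not self.queue:
            return False
        message = self.queue.popleft()
        message.receive_count += 1
        try:
            handler(message.body)  # success: exactly one consumer has processed it
        except Exception:
            if message.receive_count >= self.max_receive_count:
                self.dead_letters.append(message)  # give up: Dead Letter Channel
            else:
                self.queue.appendleft(message)  # retry at the head to keep ordering
        return True


processed: List[str] = []


def handle(body: str) -> None:
    if body == "bad":
        raise ValueError("cannot process")
    processed.append(body)


channel = PointToPointChannel(max_receive_count=3)
for body in ["ok-1", "bad", "ok-2"]:
    channel.send(body)
while channel.poll(handle):
    pass

print(processed)  # ['ok-1', 'ok-2']
print([(m.body, m.receive_count) for m in channel.dead_letters])  # [('bad', 3)]
```

Retrying at the head mirrors an ordered channel: a failing message holds back later ones until it is dead-lettered. A channel without ordering guarantees could requeue it at the tail instead.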

AWS Implementation

A common architecture to implement this pattern in AWS involves:

- Point-to-Point Channel implemented using Amazon SQS (Simple Queue Service), with the option of a FIFO queue if message order must be preserved

- Polling Consumer (and dead letter queue handler) using AWS Lambda serverless functions

- Dead Letter Channel using an SQS dead-letter queue

Figure 3: A point-to-point messaging integration with a dead letter channel implemented with native AWS services
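A minimal Python sketch of this architecture follows. To run without AWS credentials, it only builds the keyword arguments for boto3's `sqs.create_queue()` and defines a Lambda handler for an SQS event source; the queue name `orders`, the default `max_receive_count=5` and `process_order()` are hypothetical. The handler returns a partial batch response, which assumes the event source mapping has `ReportBatchItemFailures` enabled. Because the queue is FIFO, the handler stops at the first failure and reports that message and every later one, so nothing is processed out of order. Returned messages become visible again, and SQS moves a message to the dead-letter queue once its receive count exceeds `maxReceiveCount`.

```python
import json
from typing import Any, Dict, List


def dlq_request(base_name: str) -> Dict[str, Any]:
    """Keyword arguments for sqs.create_queue(); a FIFO queue needs a FIFO dead-letter queue."""
    return {"QueueName": f"{base_name}-dlq.fifo", "Attributes": {"FifoQueue": "true"}}


def queue_request(base_name: str, dlq_arn: str, max_receive_count: int = 5) -> Dict[str, Any]:
    """Keyword arguments for sqs.create_queue() for the main point-to-point channel."""
    redrive_policy = {"deadLetterTargetArn": dlq_arn, "maxReceiveCount": str(max_receive_count)}
    return {
        "QueueName": f"{base_name}.fifo",  # FIFO queue names must end in .fifo
        "Attributes": {
            "FifoQueue": "true",
            "ContentBasedDeduplication": "true",
            "RedrivePolicy": json.dumps(redrive_policy),  # SQS expects a JSON string
        },
    }


def process_order(order: Dict[str, Any]) -> None:
    """Hypothetical business logic: reject payloads without an order_id."""
    if "order_id" not in order:
        raise ValueError("missing order_id")


def handler(event: Dict[str, Any], context: Any = None) -> Dict[str, List[Dict[str, str]]]:
    """Lambda handler for an SQS FIFO event source that reports per-message failures."""
    records = event.get("Records", [])
    for index, record in enumerate(records):
        try:
            process_order(json.loads(record["body"]))  # JSONDecodeError is a ValueError
        except ValueError:
            # Return the failed record and all later ones so ordering is preserved.
            return {"batchItemFailures": [{"itemIdentifier": r["messageId"]} for r in records[index:]]}
    return {"batchItemFailures": []}


# With AWS credentials configured, the requests could be applied with boto3:
#   import boto3
#   sqs = boto3.client("sqs")
#   dlq_url = sqs.create_queue(**dlq_request("orders"))["QueueUrl"]
#   dlq_arn = sqs.get_queue_attributes(
#       QueueUrl=dlq_url, AttributeNames=["QueueArn"])["Attributes"]["QueueArn"]
#   sqs.create_queue(**queue_request("orders", dlq_arn))
```

On a standard queue, the handler could instead continue past a failure and report only the individual failed messages.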

AWS alternatives to Amazon SQS include:

- Amazon MQ, a managed message broker service for Apache ActiveMQ and RabbitMQ, useful for customers who are already invested in those technologies

- Amazon AppFlow, a fully managed integration service for securely transferring data between Software-as-a-Service (SaaS) applications, such as Salesforce, Slack and ServiceNow, and AWS services, such as Amazon S3 and Amazon Redshift

Key Business Benefits

- Full parity with the enterprise integration pattern and applicability of existing team skills and knowledge

- Eliminate the undifferentiated heavy lifting of managing legacy message brokers by taking advantage of the serverless architecture of SQS (no infrastructure or software to provision, configure, patch, scale or back up)

- Amazon SQS is offered with an SLA of 99.9%

- Pay-as-you-go pricing of $0.40 per million requests to SQS standard queues (with a generous free tier of 1 million requests per month)

- Security posture that includes data encryption at rest and in-flight, PCI-DSS certification and HIPAA eligibility

- Virtually unlimited throughput and number of messages, with single-digit millisecond latency

- Fully automated and repeatable architecture using CloudFormation infrastructure as code
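As a worked example of the pricing quoted above, the sketch below estimates a monthly SQS bill. For illustration it assumes that every message costs three requests (send, receive and delete) with no batching, and it applies the SQS rule that each 64 KB chunk of a payload is billed as one request. The function name and the 10-million-message workload are hypothetical; real bills also include empty receives and vary by region.

```python
import math

PRICE_PER_MILLION_USD = 0.40  # standard-queue price quoted above
FREE_TIER_REQUESTS = 1_000_000  # per month


def monthly_sqs_cost(messages: int, payload_kb: float = 1.0, requests_per_message: int = 3) -> float:
    """Estimate monthly SQS cost in USD (hypothetical helper, no batching assumed)."""
    chunks = max(1, math.ceil(payload_kb / 64))  # each 64 KB chunk counts as a request
    billable = messages * requests_per_message * chunks
    paid = max(0, billable - FREE_TIER_REQUESTS)
    return round(paid / 1_000_000 * PRICE_PER_MILLION_USD, 2)


print(monthly_sqs_cost(10_000_000))  # 11.6
print(monthly_sqs_cost(10_000_000, payload_kb=100))  # 23.6
```

Here 10 million 1 KB messages produce 30 million requests, of which 29 million are billable; a 100 KB payload spans two chunks and doubles the request count.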

For more details, see the Amazon Simple Queue Service Documentation.

SCENARIO 2: PUBLISH-SUBSCRIBE, ASYNCHRONOUS MESSAGING

Like a radio broadcast, the idea behind publish-subscribe, commonly called pub-sub, is to send information once and have it received by multiple subscribers listening to a “topic”. Unlike point-to-point messaging, where consumers poll for messages, this pattern pushes messages to interested receivers (subscribers) so that they can process and act upon the data immediately. This is the crux of an “event-driven” architecture.

Business Use Cases

- One-way event notification from an event producer to multiple consumers

- Publication of data from a source to multiple interested targets

Enterprise Application Integration Patterns

A publish-subscribe pattern typically involves:

- A Publish-Subscribe Channel featuring at least one message producer and multiple consumers

- An Event-Driven Consumer that automatically receives messages as they arrive on the channel
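The two roles can be illustrated with a minimal in-memory Python sketch (hypothetical names, not an AWS API). A `Topic` plays the Publish-Subscribe Channel, and each subscriber is an Event-Driven Consumer whose callback is invoked as soon as a message is published, rather than polling for it.

```python
from typing import Callable, Dict, List

Subscriber = Callable[[Dict[str, str]], None]


class Topic:
    """Hypothetical in-memory Publish-Subscribe Channel."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.subscribers: List[Subscriber] = []

    def subscribe(self, subscriber: Subscriber) -> None:
        self.subscribers.append(subscriber)

    def publish(self, message: Dict[str, str]) -> int:
        """Push one copy of the message to every subscriber; return the delivery count."""
        for subscriber in self.subscribers:
            subscriber(dict(message))  # each consumer gets its own copy
        return len(self.subscribers)


billing: List[str] = []
shipping: List[str] = []

orders = Topic("order-events")
orders.subscribe(lambda m: billing.append(m["order_id"]))
orders.subscribe(lambda m: shipping.append(m["order_id"]))

deliveries = orders.publish({"order_id": "A-100", "type": "OrderPlaced"})
print(deliveries, billing, shipping)  # 2 ['A-100'] ['A-100']
```

The producer publishes once and never learns who the consumers are; adding a third consumer is one more `subscribe` call.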

AWS Implementation

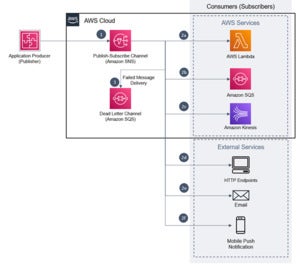

A common architecture to implement this pattern in AWS involves:

- Publish-Subscribe Channel using Amazon SNS (Simple Notification Service), with FIFO topics available if message order must be preserved

- Multiple Event-Driven Consumer endpoints including AWS services (such as Lambda, SQS and Kinesis) and external services (such as HTTP endpoints, email and mobile push notification)

- Optional Dead Letter Channel using Amazon SQS (Simple Queue Service) for messages that fail delivery

Figure 5: An AWS-native publish-subscribe messaging architecture with multiple AWS and non-AWS receivers
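A minimal Python sketch of the SNS side follows. To run without AWS credentials, it builds keyword arguments for boto3's `sns.create_topic()`, `sns.subscribe()` and `sns.publish()` as plain dictionaries; the topic name `order-events`, the ARNs and the `MessageGroupId` value are hypothetical. It creates a FIFO topic and subscribes an SQS queue with raw message delivery. It also attaches a subscription redrive policy so that messages SNS cannot deliver land in an SQS dead-letter queue.

```python
import json
from typing import Any, Dict


def fifo_topic_request(name: str) -> Dict[str, Any]:
    """Keyword arguments for sns.create_topic(); FIFO topic names must end in .fifo."""
    return {
        "Name": f"{name}.fifo",
        "Attributes": {"FifoTopic": "true", "ContentBasedDeduplication": "true"},
    }


def sqs_subscription_request(topic_arn: str, queue_arn: str, dlq_arn: str) -> Dict[str, Any]:
    """Keyword arguments for sns.subscribe() with a subscription-level dead-letter queue."""
    return {
        "TopicArn": topic_arn,
        "Protocol": "sqs",
        "Endpoint": queue_arn,
        "Attributes": {
            "RawMessageDelivery": "true",  # deliver the body without the SNS JSON envelope
            "RedrivePolicy": json.dumps({"deadLetterTargetArn": dlq_arn}),
        },
        "ReturnSubscriptionArn": True,
    }


def publish_request(topic_arn: str, message: Dict[str, Any], group_id: str) -> Dict[str, Any]:
    """Keyword arguments for sns.publish(); FIFO topics order messages per MessageGroupId."""
    return {"TopicArn": topic_arn, "Message": json.dumps(message), "MessageGroupId": group_id}


# With AWS credentials configured, and queue_arn / dlq_arn naming existing SQS FIFO queues:
#   import boto3
#   sns = boto3.client("sns")
#   topic_arn = sns.create_topic(**fifo_topic_request("order-events"))["TopicArn"]
#   sns.subscribe(**sqs_subscription_request(topic_arn, queue_arn, dlq_arn))
#   sns.publish(**publish_request(topic_arn, {"order_id": "A-100"}, group_id="customer-42"))
```

Both queues also need an access policy that allows the SNS service principal to send messages to them. That policy is omitted here.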

Amazon EventBridge is a serverless event bus that can be used as an alternative to SNS for publish-subscribe scenarios. There are three main points of differentiation between the two:

- EventBridge provides out-of-the-box integration with SaaS applications, such as Salesforce, Slack, New Relic, Datadog, Shopify and PagerDuty

- EventBridge enables archival and replay of events

- EventBridge has a schema registry feature and can discover the OpenAPI schemas of events routed through the bus

Key Business Benefits

- Serverless, fully managed message broker that removes the undifferentiated heavy lifting of managing infrastructure, software, capacity, backups and availability

- Commitment-free, pay-as-you-go pricing of $0.50 per million SNS requests (with a free tier of 1 million requests per month) plus delivery charges based on endpoint type (such as $0.60 per million HTTP/S notifications or $0.50 per million mobile push notifications)

- High throughput and elastic scalability, supporting a virtually unlimited number of messages per second, up to 100,000 topics and up to 12.5 million subscriptions per topic

- Conforms with established enterprise integration pattern and enables transfer of existing skills

- Data durability provided by redundant storage of messages across multiple, geographically distributed facilities and integration with SQS for dead-letter channel capability

- Security posture that includes data encryption at rest and in-flight, private message flow (using VPC endpoints) and compliance with major regimes including HIPAA, FedRAMP, PCI-DSS, SOC and IRAP

For more details, see the Amazon Simple Notification Service Documentation.

SCENARIO 3: LOW-LATENCY DATA STREAMING

Data and events generated in modern systems lose value over time. The earlier an insight can be derived to inform a decision or course of action, the sooner organisations can respond, intervene and act to pre-empt or correct an outcome.

Business Use Cases

Applications of data streaming technology are broad and varied. They include:

- Detection of fraudulent financial activities and transactions

- Live status and telemetry readings from connected (IoT) devices and sensors for digital twin applications

- Analysis of website clickstream (user behavior) data for personalization and recommendation

- Processing of equipment and asset health indicators to enable preventative maintenance

Enterprise Application Integration Patterns

Data streaming can be seen as a modern evolution of the Message Bus pattern, specialised for high-volume, time-sensitive data delivery. It enables a customer to ingest, process and analyse large volumes of high velocity data from a variety of sources concurrently and in real time.

A data streaming solution is unique in that it combines messaging, storage and processing of events all in one place:

- Data streams handle high concurrency by distributing messages into partitions, or shards, which are effectively lightweight Publish-Subscribe Channels that can be consumed by multiple consumers.

- Data stream storage allows consumers to go back in time and “resume” or “replay” events that they have missed using a single source of truth, which can be useful for reconstructing state, enabling temporal data analysis and event sourcing applications.

- A Competing Consumers pattern allows consumers to read from partitions concurrently and independently, each keeping an offset to track its progress.

- Processing events in real time, as they are ingested, accelerates the analysis of data, and the insights derived from it, as it arrives.

Figure 6: A Message Bus pattern forms the basis of a modern low latency data streaming platform. Diagram courtesy of Enterprise Integration Patterns – Message Bus

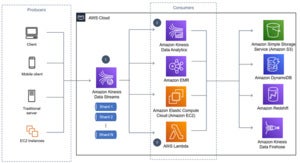
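The following minimal in-memory Python sketch (hypothetical names; not Kinesis or Kafka) shows these ideas together. Records with the same key are hashed to the same partition so their order is kept, and each consumer keeps its own offset per partition and reads independently. Because records are retained after being read, a consumer can rewind its offset to replay history. MD5 is used here only to get a hash that is stable across runs.

```python
import hashlib
from typing import Dict, List, Tuple


class PartitionedLog:
    """Hypothetical in-memory partitioned event log with per-consumer offsets."""

    def __init__(self, partitions: int) -> None:
        self.partitions: List[List[str]] = [[] for _ in range(partitions)]
        self.offsets: Dict[Tuple[str, int], int] = {}  # (consumer, partition) -> next offset

    def partition_for(self, key: str) -> int:
        """Stable key-to-partition mapping, so one key always lands in one partition."""
        digest = hashlib.md5(key.encode("utf-8")).digest()
        return int.from_bytes(digest, "big") % len(self.partitions)

    def append(self, key: str, event: str) -> Tuple[int, int]:
        """Append an event and return (partition, offset)."""
        partition = self.partition_for(key)
        self.partitions[partition].append(event)
        return partition, len(self.partitions[partition]) - 1

    def read(self, consumer: str, partition: int, max_records: int = 10) -> List[str]:
        """Read from the consumer's current offset, then advance (checkpoint) it."""
        start = self.offsets.get((consumer, partition), 0)
        batch = self.partitions[partition][start:start + max_records]
        self.offsets[(consumer, partition)] = start + len(batch)
        return batch

    def rewind(self, consumer: str, partition: int, offset: int = 0) -> None:
        """Move the consumer's offset back to replay retained events."""
        self.offsets[(consumer, partition)] = offset


log = PartitionedLog(partitions=4)
for reading in ["t=20.1", "t=20.4", "t=21.0"]:
    partition, _ = log.append("device-42", reading)

print(log.read("dashboard", partition))  # ['t=20.1', 't=20.4', 't=21.0']
print(log.read("alerting", partition, max_records=2))  # ['t=20.1', 't=20.4']
print(log.read("dashboard", partition))  # [] (already caught up)
log.rewind("dashboard", partition)  # replay from the beginning
print(log.read("dashboard", partition))  # ['t=20.1', 't=20.4', 't=21.0']
```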

AWS Implementation

A common architecture to implement this pattern in AWS involves:

- Amazon Kinesis Data Streams, which caters for data stream ingestion and distribution into multiple partitions called shards.

- Kinesis shards support both push and pull consumer semantics and preserve the order of records within a shard.

- Amazon Kinesis Data Analytics can be used for in-flight processing of the data payload.

Figure 7: Real-time event streaming using Amazon Kinesis, a native AWS stream ingestion and storage service.
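Kinesis Data Streams assigns each record to a shard by computing the MD5 hash of its partition key as a 128-bit integer and finding the shard whose hash key range contains that value. The Python sketch below reproduces that lookup for illustration under two stated assumptions: the hash key space is split evenly across the stream's shards, and partition keys are UTF-8 encoded. A real producer should read each shard's `HashKeyRange` from the `ListShards` API rather than compute the ranges, because resharding changes them.

```python
import hashlib
from typing import List, Tuple

HASH_KEY_SPACE = 2 ** 128  # MD5 produces a 128-bit value


def even_hash_key_ranges(shard_count: int) -> List[Tuple[int, int]]:
    """Assumed even split of the hash key space into contiguous, inclusive ranges."""
    step = HASH_KEY_SPACE // shard_count
    ranges = []
    for i in range(shard_count):
        start = i * step
        # The last shard absorbs any remainder so the ranges cover the whole space.
        end = HASH_KEY_SPACE - 1 if i == shard_count - 1 else (i + 1) * step - 1
        ranges.append((start, end))
    return ranges


def hash_key(partition_key: str) -> int:
    """128-bit integer from the MD5 digest of the partition key."""
    return int.from_bytes(hashlib.md5(partition_key.encode("utf-8")).digest(), "big")


def shard_for(partition_key: str, ranges: List[Tuple[int, int]]) -> int:
    """Index of the shard whose hash key range contains the key's hash."""
    h = hash_key(partition_key)
    for index, (start, end) in enumerate(ranges):
        if start <= h <= end:
            return index
    raise ValueError("hash key is not covered by any shard")


ranges = even_hash_key_ranges(4)
for key in ["device-1", "device-2", "device-42"]:
    print(key, "-> shard", shard_for(key, ranges))
```

Because the mapping depends only on the partition key, every record for `device-42` lands on the same shard and keeps its order, while high-cardinality keys spread load across shards.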

AWS alternatives to Amazon Kinesis Data Streams include:

- Amazon MSK (Managed Streaming for Apache Kafka) is a service for customers who are invested in the Apache Kafka ecosystem and tools and wish to continue using it, but who want to offload the undifferentiated heavy lifting of managing Kafka clusters. MSK makes it easier for customers to build and run production applications on Apache Kafka without needing infrastructure and cluster management expertise

- Amazon Kinesis Data Firehose is an adjacent capability that can supplement or replace Kinesis Data Streams where some delivery latency is tolerable (Firehose buffers messages until a configurable buffer size or interval is reached, with a minimum of 1MB or 60 seconds), or where data needs to be streamed to targets such as S3, Redshift, Splunk or Elasticsearch and Firehose's native integration with these services accelerates delivery
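The buffering behaviour described above amounts to "deliver when either threshold is reached first". The Python sketch below is a simplified, hypothetical model of that rule, not Firehose's implementation. It uses the 1 MB and 60-second figures quoted above as parameters, measures the interval from the first buffered record, and takes the clock as an explicit argument so the behaviour is deterministic.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class BufferModel:
    """Hypothetical size-or-interval buffer, flushed by whichever limit is hit first."""

    size_limit_bytes: int = 1_000_000
    interval_seconds: float = 60.0
    records: List[bytes] = field(default_factory=list)
    buffered_bytes: int = 0
    first_record_at: Optional[float] = None
    deliveries: List[List[bytes]] = field(default_factory=list)

    def put(self, record: bytes, now: float) -> None:
        if self.first_record_at is None:
            self.first_record_at = now
        self.records.append(record)
        self.buffered_bytes += len(record)
        self.tick(now)

    def tick(self, now: float) -> None:
        """Flush the buffer if the size or interval threshold has been reached."""
        if self.first_record_at is None:
            return
        size_hit = self.buffered_bytes >= self.size_limit_bytes
        time_hit = now - self.first_record_at >= self.interval_seconds
        if size_hit or time_hit:
            self.deliveries.append(self.records)
            self.records, self.buffered_bytes, self.first_record_at = [], 0, None


buffer = BufferModel()
for t in (0, 10, 20):
    buffer.put(b"x" * 400_000, now=t)  # the third record pushes the buffer past 1 MB
buffer.put(b"small", now=30)
buffer.tick(now=89)  # 59 s since the small record arrived: keep buffering
buffer.tick(now=90)  # 60 s reached: deliver
print([len(batch) for batch in buffer.deliveries])  # [3, 1]
```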

Key Business Benefits

- Managed service with high availability, strong data durability and simple administration. The undifferentiated heavy lifting of provisioning infrastructure, installing software, performing backups or managing availability is avoided

- Simple, elastically resizable capacity with zero downtime

- Pay-as-you-go pricing of $0.015 per shard-hour (each shard ingests up to 1MB/second) plus $0.014 per million PUT payload units (each unit is a 25KB chunk of a record)

- Amazon Kinesis offers an SLA of 99.9%

- Extensive integration options (APIs, SDKs, client libraries and agents) as well as deep, native integration with a large number of AWS services

- In-order data persistence (up to 365 days) allowing message replay and event sourcing architectures

- Fully automated and repeatable architecture using CloudFormation infrastructure as code

- Verified compliance with SOC, PCI, FedRAMP and HIPAA regimes
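To make the pricing concrete, the sketch below estimates a monthly Kinesis Data Streams bill in provisioned mode. It reads the rates quoted above as $0.015 per shard-hour and $0.014 per million 25 KB PUT payload units. It also assumes per-shard write limits of 1 MB/second (taken as 1,024 KB) or 1,000 records/second, and a 730-hour month. The helper name and the workload are hypothetical and regional prices vary, so treat the result as an estimate.

```python
import math

SHARD_HOUR_USD = 0.015
PER_MILLION_PUT_UNITS_USD = 0.014
PUT_UNIT_KB = 25
SHARD_WRITE_KB_PER_SEC = 1024  # 1 MB/second, taken as 1,024 KB
SHARD_WRITE_RECORDS_PER_SEC = 1000
HOURS_PER_MONTH = 730


def monthly_kinesis_cost(records_per_sec: int, record_kb: float) -> dict:
    """Estimate provisioned-mode cost in USD (hypothetical helper using the rates above)."""
    shards = max(
        math.ceil(records_per_sec * record_kb / SHARD_WRITE_KB_PER_SEC),  # byte limit
        math.ceil(records_per_sec / SHARD_WRITE_RECORDS_PER_SEC),  # record-count limit
        1,
    )
    shard_cost = shards * HOURS_PER_MONTH * SHARD_HOUR_USD
    units_per_record = math.ceil(record_kb / PUT_UNIT_KB)
    records_per_month = records_per_sec * 3600 * HOURS_PER_MONTH
    put_cost = records_per_month * units_per_record / 1_000_000 * PER_MILLION_PUT_UNITS_USD
    return {
        "shards": shards,
        "shard_cost": round(shard_cost, 2),
        "put_cost": round(put_cost, 2),
        "total": round(shard_cost + put_cost, 2),
    }


print(monthly_kinesis_cost(records_per_sec=500, record_kb=3))
# {'shards': 2, 'shard_cost': 21.9, 'put_cost': 18.4, 'total': 40.3}
```

At 500 records/second of 3 KB each, the 1,500 KB/second ingest rate rather than the record count sets the shard count at two.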

For more details see Amazon Kinesis Documentation.

Conclusion

Integration is diverse, complex and business-critical. Given the wide spectrum of potential use cases, from simple intra-organizational file transfers to real-time telemetry ingestion and processing from IoT sensors, a feature-rich integration platform is a business imperative. Traditional platforms-of-all-fit cannot evolve and refresh fast enough to keep pace with the rate of technology innovation. This is why AWS, with its breadth and depth of functionality and a rate of innovation second to none, is the platform-of-best-fit for connected businesses of this generation.
