Multi-layer Perceptron in TensorFlow – Javatpoint

Multi-layer Perceptron in TensorFlow

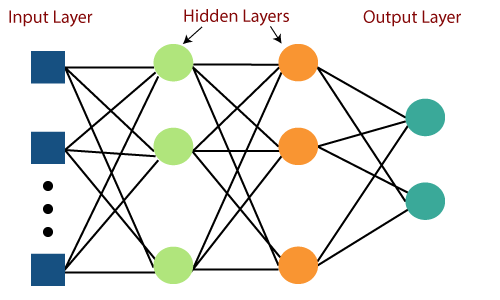

A multi-layer perceptron is a more complex artificial neural network architecture than the single perceptron: it is formed from multiple layers of perceptrons. TensorFlow is a very popular deep learning framework released by Google, and this notebook shows how to build a neural network with this library. To understand what a multi-layer perceptron is, it also helps to develop one from scratch using NumPy.

The pictorial representation of multi-layer perceptron learning is shown below:

MLP networks are used for supervised learning. The typical learning algorithm for MLP networks is the backpropagation algorithm.

A multilayer perceptron (MLP) is a feedforward artificial neural network that generates a set of outputs from a set of inputs. An MLP is characterized by several layers of nodes connected as a directed graph between the input and output layers. An MLP is trained with backpropagation and is a deep learning method.
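To make the feedforward and backpropagation ideas concrete, here is a minimal NumPy sketch of an MLP with one hidden layer. It is an illustration only, not the code from this tutorial: the sigmoid activation, the mean squared error loss, the layer sizes, the learning rate, and the XOR toy data are all assumptions chosen to keep the example small.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def forward(x, params):
    """Feedforward pass: input -> hidden layer -> output layer."""
    W1, b1, W2, b2 = params
    h = sigmoid(x @ W1 + b1)      # hidden-layer activations
    y_hat = sigmoid(h @ W2 + b2)  # network outputs
    return h, y_hat

def backprop_step(x, y, params, lr=0.5):
    """One gradient-descent step; gradients come from backpropagation."""
    W1, b1, W2, b2 = params
    h, y_hat = forward(x, params)
    n = x.shape[0]
    # Error signal at the output layer (squared error, sigmoid derivative).
    delta2 = (y_hat - y) * y_hat * (1.0 - y_hat)
    # Propagate the error signal back to the hidden layer.
    delta1 = (delta2 @ W2.T) * h * (1.0 - h)
    # Average the gradients over the batch and update every parameter.
    new_W2 = W2 - lr * (h.T @ delta2) / n
    new_b2 = b2 - lr * delta2.mean(axis=0)
    new_W1 = W1 - lr * (x.T @ delta1) / n
    new_b1 = b1 - lr * delta1.mean(axis=0)
    return (new_W1, new_b1, new_W2, new_b2)

def mse(x, y, params):
    """Mean squared error of the network outputs."""
    return float(np.mean((forward(x, params)[1] - y) ** 2))

# Toy data (assumption): the XOR problem, 4 samples with 2 inputs each.
x = np.array([[0.0, 0.0], [0.0, 1.0], [1.0, 0.0], [1.0, 1.0]])
y = np.array([[0.0], [1.0], [1.0], [0.0]])

rng = np.random.default_rng(0)
params = (rng.normal(size=(2, 4)), np.zeros(4),
          rng.normal(size=(4, 1)), np.zeros(1))

loss_before = mse(x, y, params)
for _ in range(2000):
    params = backprop_step(x, y, params)
loss_after = mse(x, y, params)
print(loss_before, loss_after)
```

The same two phases, a forward pass followed by error propagation from the output back toward the input, are what a framework such as TensorFlow automates for larger networks.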

Now we focus on implementing an MLP for an image classification problem.

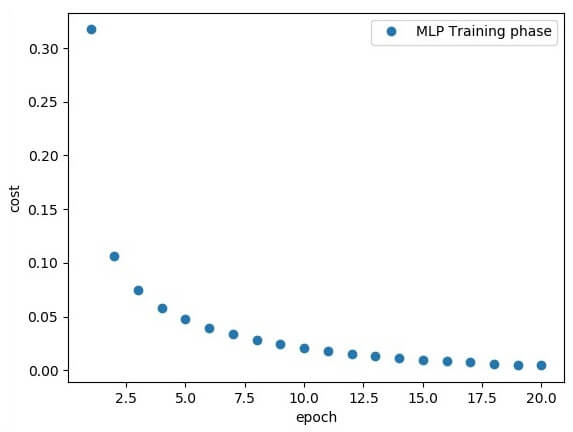

The above lines of code generate the following output:

Creating an interactive session

We have two basic options when using TensorFlow to run our code:

- Build graphs and run sessions [do all the set-up first, then execute a session to evaluate tensors and run operations].

- Write our code and run it on the fly.

For this first part, we will use the interactive session, which is more suitable for an environment like a Jupyter notebook.
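The two options can be sketched as follows. This is a minimal illustration that assumes the TensorFlow 1.x graph-mode API, reached here through `tf.compat.v1` so that it also runs on TensorFlow 2.x; the constants and variable names are made up for the example.

```python
import tensorflow as tf

tf1 = tf.compat.v1
tf1.disable_eager_execution()  # use graph mode, as in TensorFlow 1.x

# Option 1: build the graph first, then run it inside an explicit session.
a = tf.constant(2.0)
b = tf.constant(3.0)
total = tf.add(a, b)
with tf1.Session() as sess:
    result_graph = sess.run(total)

# Option 2: an interactive session installs itself as the default session,
# so tensors can be evaluated on the fly with .eval().
sess = tf1.InteractiveSession()
result_interactive = tf.multiply(a, b).eval()
sess.close()

print(result_graph, result_interactive)
```

In a notebook, the second style saves passing a session object around in every cell, which is why it is used here.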

Creating placeholders

It’s a best practice to create placeholders before variable assignments when using TensorFlow. Here we’ll create placeholders for the inputs (“Xs”) and outputs (“Ys”).

Placeholder “X”: represents the ‘space’ allocated for the input, that is, the images.

- Each input has 784 pixels, arranged as a matrix 28 pixels wide by 28 pixels high.

- The ‘shape’ argument defines the tensor size by its dimensions.
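A placeholder with this shape could be declared as sketched below. It assumes the TensorFlow 1.x API (via `tf.compat.v1`); the names `x`, `y_` and `x_image`, the dummy batch, and the choice of 10 output classes are assumptions for illustration, since the text does not state the number of classes.

```python
import numpy as np
import tensorflow as tf

tf1 = tf.compat.v1
tf1.disable_eager_execution()  # placeholders require graph mode

NUM_PIXELS = 28 * 28  # 784 pixels per image, as described above
NUM_CLASSES = 10      # assumption: not stated in the text

# 'None' leaves the batch dimension open, so any number of images can be fed.
x = tf1.placeholder(tf.float32, shape=[None, NUM_PIXELS], name="x")
y_ = tf1.placeholder(tf.float32, shape=[None, NUM_CLASSES], name="y_")

# View each flat row of 784 values as a 28 x 28 image again.
x_image = tf.reshape(x, [-1, 28, 28])

sess = tf1.InteractiveSession()
batch = np.zeros((5, NUM_PIXELS), dtype=np.float32)  # dummy data, 5 blank images
images = x_image.eval(feed_dict={x: batch})
sess.close()

print(x.shape, images.shape)
```

The placeholder holds no data itself; values are supplied through `feed_dict` only when a tensor that depends on it is evaluated.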
