How Kubernetes works under the hood with Docker Desktop

Docker Desktop makes developing applications for Kubernetes easy. It provides a smooth Kubernetes setup experience by hiding the complexity of the installation and wiring with the host. Developers can focus entirely on their work rather than dealing with the Kubernetes setup details.

This blog post covers development use cases and what happens under the hood for each one of them. We analyze how Kubernetes is set up to facilitate the deployment of applications, whether they are built locally or not, and the ease of access to deployed applications.


1. Kubernetes setup

Kubernetes can be enabled from the Kubernetes settings panel as shown below.

Checking the Enable Kubernetes box and then pressing Apply & Restart triggers the installation of a single-node Kubernetes cluster. This is all a developer needs to do.

What exactly is happening under the hood?

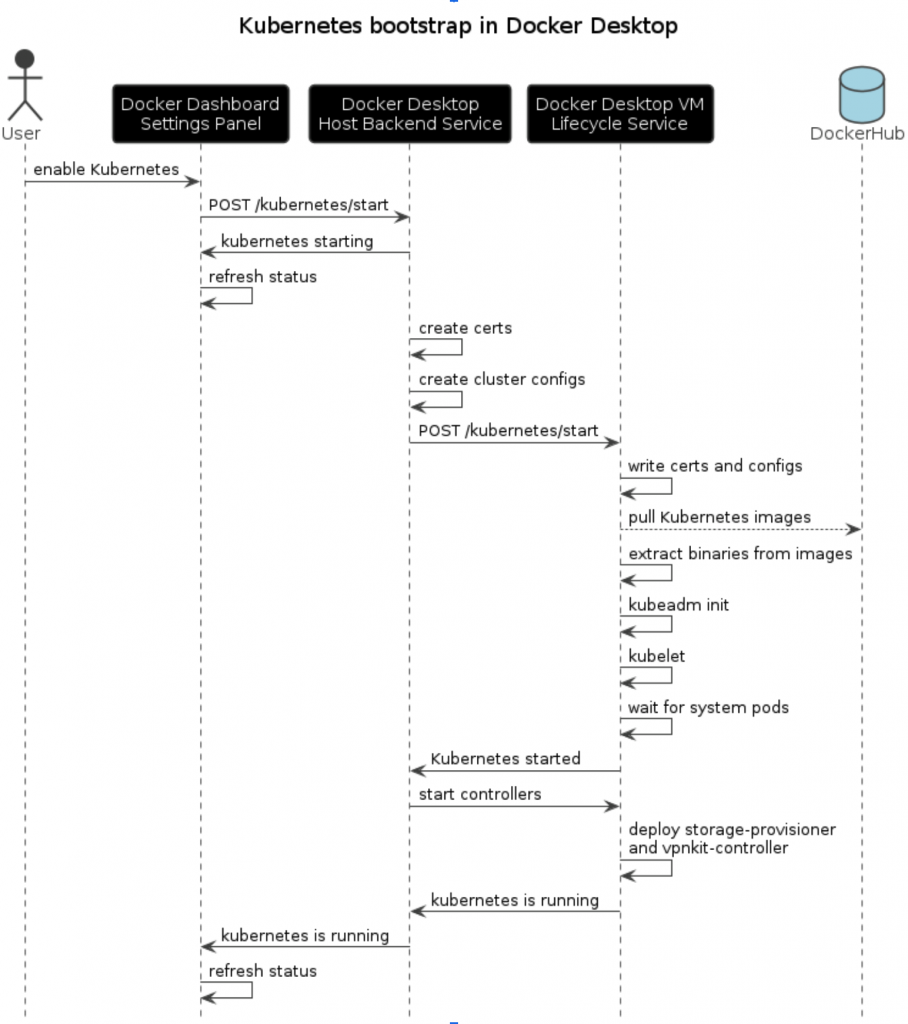

Internally, the following actions are triggered in the Docker Desktop Backend and VM:

- Generation of certificates and cluster configuration

- Download and installation of Kubernetes internal components

- Cluster bootup

- Installation of additional controllers for networking and storage

The diagram below shows the interactions between the internal components of Docker Desktop for the cluster setup.

Generating cluster certs, keys and config files

Kubernetes requires certificates and keys for authenticated connections between its internal components and with the outside world. Docker Desktop takes care of generating these server and client certificates for the main internal services: kubelet (the node manager), service account management, front proxy, API server, and etcd.
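The idea of a cluster PKI can be sketched in Go: a self-signed certificate authority signs a server certificate, and a client that trusts the CA can then verify the server. This is only an illustration using the standard library, not Docker Desktop's actual generation code, which the post does not show. The function names, the `example-ca` name, the key type, and the one-day lifetime are all assumptions made for the example.

```go
package main

import (
	"crypto/ecdsa"
	"crypto/elliptic"
	"crypto/rand"
	"crypto/x509"
	"crypto/x509/pkix"
	"fmt"
	"math/big"
	"time"
)

// newCert creates a key pair and a certificate for name. With a nil parent the
// certificate is a self-signed CA; otherwise it is a server certificate signed
// by parent. Names, key type, and lifetime are illustrative assumptions.
func newCert(name string, serial int64, parent *x509.Certificate, parentKey *ecdsa.PrivateKey) (*x509.Certificate, *ecdsa.PrivateKey, error) {
	key, err := ecdsa.GenerateKey(elliptic.P256(), rand.Reader)
	if err != nil {
		return nil, nil, err
	}
	tmpl := &x509.Certificate{
		SerialNumber: big.NewInt(serial),
		Subject:      pkix.Name{CommonName: name},
		NotBefore:    time.Now().Add(-time.Minute),
		NotAfter:     time.Now().Add(24 * time.Hour),
	}
	signer, signerKey := parent, parentKey
	if parent == nil {
		// Self-signed certificate authority.
		tmpl.IsCA = true
		tmpl.BasicConstraintsValid = true
		tmpl.KeyUsage = x509.KeyUsageCertSign
		signer, signerKey = tmpl, key
	} else {
		// Server certificate valid for the DNS name it is issued to.
		tmpl.KeyUsage = x509.KeyUsageDigitalSignature
		tmpl.ExtKeyUsage = []x509.ExtKeyUsage{x509.ExtKeyUsageServerAuth}
		tmpl.DNSNames = []string{name}
	}
	der, err := x509.CreateCertificate(rand.Reader, tmpl, signer, &key.PublicKey, signerKey)
	if err != nil {
		return nil, nil, err
	}
	cert, err := x509.ParseCertificate(der)
	return cert, key, err
}

// verify checks that leaf is valid for dnsName and chains up to ca.
func verify(leaf, ca *x509.Certificate, dnsName string) error {
	roots := x509.NewCertPool()
	roots.AddCert(ca)
	_, err := leaf.Verify(x509.VerifyOptions{Roots: roots, DNSName: dnsName})
	return err
}

func main() {
	ca, caKey, err := newCert("example-ca", 1, nil, nil)
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	server, _, err := newCert("kubernetes.docker.internal", 2, ca, caKey)
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	fmt.Println("verification error:", verify(server, ca, "kubernetes.docker.internal"))
}
```

A real cluster uses several such certificates (and usually more than one CA), but each follows the same sign-then-verify pattern.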

Docker Desktop installs Kubernetes using kubeadm, so it needs to create the kubeadm runtime and cluster-wide configuration. This includes configuration for the cluster’s network topology, certificates, control plane endpoint, and so on. The configuration uses Docker Desktop-specific naming and cannot be customized by the user. The current-context, user, and cluster names are always set to docker-desktop, while the global endpoint of the cluster uses the DNS name https://kubernetes.docker.internal:6443. Port 6443 is the default port the Kubernetes control plane is bound to. Docker Desktop forwards this port to the host, which makes communicating with the control plane as easy as if it were installed directly on the host.
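Because the control plane port is forwarded to the host, a plain TCP connection from the host is enough to check that something is listening on the endpoint. The following Go sketch extracts the host and port from the endpoint URL quoted above and attempts a connection. The helper names `hostPort` and `reachable` and the two-second timeout are assumptions for illustration, and the check only succeeds on a machine where Docker Desktop's Kubernetes is running.

```go
package main

import (
	"fmt"
	"net"
	"net/url"
	"time"
)

// hostPort extracts the "host:port" pair from a cluster endpoint URL.
func hostPort(endpoint string) (string, error) {
	u, err := url.Parse(endpoint)
	if err != nil {
		return "", err
	}
	if u.Port() == "" {
		return "", fmt.Errorf("endpoint %q has no explicit port", endpoint)
	}
	return u.Host, nil
}

// reachable reports whether a TCP connection to addr succeeds within timeout.
func reachable(addr string, timeout time.Duration) bool {
	conn, err := net.DialTimeout("tcp", addr, timeout)
	if err != nil {
		return false
	}
	conn.Close()
	return true
}

func main() {
	addr, err := hostPort("https://kubernetes.docker.internal:6443")
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	fmt.Printf("control plane at %s reachable: %v\n", addr, reachable(addr, 2*time.Second))
}
```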

Download and installation of Kubernetes components

Inside the Docker Desktop VM, a management process named the Lifecycle service takes care of deploying and starting services such as the Docker daemon, and of notifying their state changes.

Once the Kubernetes certificates and configuration have been generated, a request is made to the Lifecycle service to install and start Kubernetes. The request contains the required certificates (Kubernetes PKI) for the setup.

The Lifecycle service then starts pulling all the images of the Kubernetes internal components from Docker Hub. These images contain binaries such as kubelet, kubeadm, kubectl, and crictl, which are extracted and placed in `/usr/bin`.

Cluster bootup

Once these binaries are in place and the configuration files have been written to the right paths, the Lifecycle service runs `kubeadm init` to initialize the cluster and then starts the kubelet process. As this is a single-node cluster setup, only one kubelet instance runs.

The Lifecycle service then waits for the following system pods to be running before notifying the Docker Desktop host service that Kubernetes has started: coredns, kube-controller-manager, and kube-apiserver.

Install additional controllers

Once Kubernetes internal services have started, Docker Desktop triggers the installation of additional controllers such as storage-provisioner and vpnkit-controller. They take care of persisting application state between reboots and upgrades, and of providing access to applications once deployed.

Once these controllers are up and running, the Kubernetes cluster is fully operational and the Docker Dashboard is notified of its state.

We can now run kubectl commands and deploy applications.

    $ kubectl get svc
    NAME         TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)   AGE
    kubernetes   ClusterIP   10.96.0.1    <none>        443/TCP   1m

Checking system pods at this stage should return the following:

    $ kubectl get pods -n kube-system
    NAME                                     READY   STATUS    RESTARTS   AGE
    coredns-78fcd69978-7m52k                 1/1     Running   0          99m
    coredns-78fcd69978-mm22t                 1/1     Running   0          99m
    etcd-docker-desktop                      1/1     Running   1          99m
    kube-apiserver-docker-desktop            1/1     Running   1          99m
    kube-controller-manager-docker-desktop   1/1     Running   1          99m
    kube-proxy-zctsm                         1/1     Running   0          99m
    kube-scheduler-docker-desktop            1/1     Running   1          99m
    storage-provisioner                      1/1     Running   0          98m
    vpnkit-controller                        1/1     Running   0          98m

2. Deploying and accessing applications

Let’s take as an example a Kubernetes YAML file for deploying docker/getting-started, the Docker Desktop tutorial. This is a generic Kubernetes YAML file deployable anywhere; it does not contain any Docker Desktop-specific configuration.

    apiVersion: v1
    kind: Service
    metadata:
      name: tutorial
    spec:
      ports:
        - name: 80-tcp
          port: 80
          protocol: TCP
          targetPort: 80
      selector:
        com.docker.project: tutorial
      type: LoadBalancer
    status:
      loadBalancer: {}
    ---
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      labels:
        com.docker.project: tutorial
      name: tutorial
    spec:
      replicas: 1
      selector:
        matchLabels:
          com.docker.project: tutorial
      strategy:
        type: Recreate
      template:
        metadata:
          labels:
            com.docker.project: tutorial
        spec:
          containers:
            - image: docker/getting-started
              name: tutorial
              ports:
                - containerPort: 80
                  protocol: TCP
              resources: {}
          restartPolicy: Always
    status: {}

On the host of Docker Desktop, open a terminal and run:

    $ kubectl apply -f tutorial.yaml
    service/tutorial created
    deployment.apps/tutorial created

Check services:

    $ kubectl get svc
    NAME         TYPE           CLUSTER-IP      EXTERNAL-IP   PORT(S)        AGE
    kubernetes   ClusterIP      10.96.0.1       <none>        443/TCP        118m
    tutorial     LoadBalancer   10.98.217.243   localhost     80:31575/TCP   12m

Services of type LoadBalancer are exposed outside the Kubernetes cluster. Opening a browser and navigating to localhost:80 displays the Docker tutorial.

Note that accessing the service is as trivial as if it were running directly on the host. Developers do not need to concern themselves with any additional configuration.

This is because Docker Desktop exposes service ports on the host to make them directly accessible there. This is done by one of the additional controllers installed previously.

Vpnkit-controller is a port forwarding service that opens ports on the host and forwards connections transparently to the pods inside the VM. It is used for forwarding connections to LoadBalancer-type services deployed in Kubernetes.
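The general technique of port forwarding can be sketched in a few lines of Go: listen on one address, and for every incoming connection open a second connection to the target and copy bytes in both directions. This is a generic TCP relay written for illustration, not vpnkit-controller's implementation. The echo server standing in for a pod, the loopback addresses, and all function names are assumptions.

```go
package main

import (
	"fmt"
	"io"
	"net"
)

// forward accepts connections on ln and relays each one to the target address.
func forward(ln net.Listener, target string) {
	for {
		client, err := ln.Accept()
		if err != nil {
			return // listener closed
		}
		go relay(client, target)
	}
}

// relay copies bytes in both directions between client and target.
func relay(client net.Conn, target string) {
	defer client.Close()
	backend, err := net.Dial("tcp", target)
	if err != nil {
		return
	}
	defer backend.Close()
	go func() {
		io.Copy(backend, client) // client -> backend
		backend.Close()          // client is done: unblock the copy below
	}()
	io.Copy(client, backend) // backend -> client
}

// startEcho starts a stand-in "pod" that echoes back whatever it receives.
func startEcho() (net.Listener, error) {
	ln, err := net.Listen("tcp", "127.0.0.1:0")
	if err != nil {
		return nil, err
	}
	go func() {
		for {
			c, err := ln.Accept()
			if err != nil {
				return
			}
			go func() {
				defer c.Close()
				io.Copy(c, c)
			}()
		}
	}()
	return ln, nil
}

// roundTrip sends msg through a forwarder to the echo backend and returns the reply.
func roundTrip(msg string) (string, error) {
	backend, err := startEcho()
	if err != nil {
		return "", err
	}
	defer backend.Close()
	front, err := net.Listen("tcp", "127.0.0.1:0")
	if err != nil {
		return "", err
	}
	defer front.Close()
	go forward(front, backend.Addr().String())

	conn, err := net.Dial("tcp", front.Addr().String())
	if err != nil {
		return "", err
	}
	defer conn.Close()
	if _, err := conn.Write([]byte(msg)); err != nil {
		return "", err
	}
	reply := make([]byte, len(msg))
	if _, err := io.ReadFull(conn, reply); err != nil {
		return "", err
	}
	return string(reply), nil
}

func main() {
	reply, err := roundTrip("hello from the host")
	fmt.Println(reply, err)
}
```

The client only ever talks to the front listener, which is what makes the forwarding transparent: from its point of view the service appears to run on the address it connected to.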

3. Speed up the develop-test inner loop

We have seen how to deploy and access an application in the cluster. However, the development cycle consists of developers modifying the code of an application and testing it continuously.

Let’s take as an example an application we are developing locally.

    $ cat main.go
    package main

    import (
        "fmt"
        "log"
        "net/http"
    )

    // handler logs the raw query string and replies with the Docker whale.
    func handler(w http.ResponseWriter, r *http.Request) {
        fmt.Println(r.URL.RawQuery)
        fmt.Fprintf(w, `
              ##         .
        ## ## ##        ==
     ## ## ## ## ##    ===
    /"""""""""""""""""\___/ ===
    {                       /  ===-
    \______ O           __/
     \    \         __/
      \____\_______/

    Hello from Docker!

    `)
    }

    func main() {
        http.HandleFunc("/", handler)
        // Listen on port 80, the containerPort declared in the Kubernetes YAML.
        log.Fatal(http.ListenAndServe(":80", nil))
    }

The Dockerfile to build and package the application as a Docker image:

    $ cat Dockerfile
    FROM golang:1.16 AS build
    WORKDIR /compose/hello-docker
    COPY main.go main.go
    RUN CGO_ENABLED=0 go build -o hello main.go

    FROM scratch
    COPY --from=build /compose/hello-docker/hello /usr/local/bin/hello
    CMD ["/usr/local/bin/hello"]

To build the application, we run docker build as usual:

    $ docker images
    REPOSITORY   TAG   IMAGE ID   CREATED   SIZE

    $ docker build -t hellodocker .
    [+] Building 0.9s (10/10) FINISHED
     => [internal] load build definition from Dockerfile    0.0s
     => => transferring dockerfile: 38B                     0.0s
    . . .
     => => naming to docker.io/library/hellodocker          0.0s

We can see the image resulting from the build stored in the Docker engine cache.

    $ docker images
    REPOSITORY    TAG      IMAGE ID       CREATED       SIZE
    hellodocker   latest   903fe47400c8   4 hours ago   6.13MB

But now we have a problem!

Kubernetes normally pulls images from a registry, which would mean we would have to push and pull the image we have built after every change. Docker Desktop removes this friction by using dockershim to share the image cache between the Docker engine and Kubernetes. Dockershim is an internal component of Kubernetes that acts like a translation layer between kubelet and Docker Engine.

For development, this provides an essential advantage: Kubernetes can create containers from images stored in the Docker Engine image cache. We can build images locally and test them right away without having to push them to a registry first.
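Whether the kubelet contacts a registry at all is governed by the container's `imagePullPolicy`. The Go sketch below models the documented Kubernetes defaulting rules and the resulting decision for a locally built image. It is a simplified model for illustration, not kubelet code: the function names are assumptions, and the image reference parsing deliberately ignores edge cases.

```go
package main

import (
	"fmt"
	"strings"
)

// defaultPullPolicy models the policy Kubernetes applies when imagePullPolicy
// is omitted: Always for untagged or ":latest" images, IfNotPresent for any
// other tag or for a digest. Simplified parsing, for illustration only.
func defaultPullPolicy(image string) string {
	if strings.Contains(image, "@") {
		return "IfNotPresent" // pinned by digest
	}
	// Only look for a tag after the last "/", so a registry port is not mistaken for one.
	name := image[strings.LastIndex(image, "/")+1:]
	i := strings.LastIndex(name, ":")
	if i < 0 || name[i+1:] == "latest" {
		return "Always"
	}
	return "IfNotPresent"
}

// contactsRegistry reports whether starting a container requires reaching a
// registry, given the pull policy and whether the image is in the local cache.
func contactsRegistry(policy string, presentLocally bool) bool {
	switch policy {
	case "Always":
		return true
	case "Never":
		return false
	default: // IfNotPresent
		return !presentLocally
	}
}

func main() {
	image := "hellodocker" // built locally, never pushed to a registry
	fmt.Println("default policy:", defaultPullPolicy(image))
	fmt.Println("registry needed by default:", contactsRegistry(defaultPullPolicy(image), true))
	fmt.Println("registry needed with IfNotPresent:", contactsRegistry("IfNotPresent", true))
}
```

In this model an untagged local image falls under the `Always` default, which would send the kubelet to a registry that does not have the image; an explicit `IfNotPresent` avoids that.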

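In the Kubernetes YAML from the tutorial example, update the image name to hellodocker and set the image pull policy to IfNotPresent. An untagged image defaults to the `latest` tag, for which Kubernetes would otherwise always try to pull from a registry; setting the policy explicitly ensures that the image from the local cache is used.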

    ...
          containers:
            - name: hello
              image: hellodocker
              ports:
                - containerPort: 80
                  protocol: TCP
              resources: {}
              imagePullPolicy: IfNotPresent
          restartPolicy: Always
    ...

Re-deploying applies the new updates:

    $ kubectl apply -f tutorial.yaml
    service/tutorial configured
    deployment.apps/tutorial configured

    $ kubectl get svc
    NAME         TYPE           CLUSTER-IP       EXTERNAL-IP   PORT(S)        AGE
    tutorial     LoadBalancer   10.109.236.243   localhost     80:31371/TCP   4s
    kubernetes   ClusterIP      10.96.0.1        <none>        443/TCP        6h56m

    $ curl localhost:80

              ##         .
        ## ## ##        ==
     ## ## ## ## ##    ===
    /"""""""""""""""""\___/ ===
    {                       /  ===-
    \______ O           __/
     \    \         __/
      \____\_______/

    Hello from Docker!

To delete the application from the cluster, run:

    $ kubectl delete -f tutorial.yaml

4. Updating Kubernetes

A newer Kubernetes version may become available after a Docker Desktop update. However, an existing cluster is not upgraded automatically: when a new Kubernetes version is added to Docker Desktop, the user needs to reset the current cluster in order to use the newest version.

As pods are designed to be ephemeral, deployed applications usually save state to persistent volumes. This is where the storage-provisioner helps in persisting the local storage data.

Conclusion

Docker Desktop offers a Kubernetes installation with solid host integration that aims to work without any user intervention. Developers who need a Kubernetes cluster but do not want to deal with its setup can simply install Docker Desktop and enable Kubernetes to have everything in place within a few minutes.

To get Docker Desktop, follow the instructions in the Docker documentation. It also contains a dedicated guide on how to enable Kubernetes.

