History of Power: The Evolution of the Electric Generation Industry

POWER magazine was launched in 1882, just as the world was beginning to grasp the implications of a new, versatile form of energy: electricity. During its 140-year history, the magazine’s pages have reflected the fast-changing evolution of the technologies and markets that characterize the world’s power sector. These are some of the events that have shaped both the history of power and the history of POWER.

The history of power generation is long and convoluted, marked by myriad conceptual and technical milestones from hundreds of contributors. Many accounts begin power’s story with the demonstration of electric conduction by Englishman Stephen Gray, which led to the 1740 invention of glass friction generators in Leyden, the Netherlands. That development is said to have inspired Benjamin Franklin’s famous experiments, as well as the invention of the battery by Italy’s Alessandro Volta in 1800, Humphry Davy’s first effective “arc lamp” in 1808, and, in 1820, Hans Christian Oersted’s demonstration of the relationship between electricity and magnetism. In arguably the most pivotal contribution to modern power systems, Michael Faraday and Joseph Henry built primitive electric motors and, in 1831, documented that an electric current can be produced in a wire moving near a magnet, demonstrating the principle of the generator.

Invention of the first rudimentary dynamo is credited to Frenchman Hippolyte Pixii in 1832. Antonio Pacinotti improved it to provide continuous direct-current (DC) power by 1860. In 1867, Werner von Siemens, Charles Wheatstone, and S.A. Varley nearly simultaneously devised the “self-exciting dynamo-electric generator.” Perhaps the most important improvement arrived in 1870, when Belgian inventor Zenobe Gramme devised a dynamo that produced a steady DC source well suited to powering motors, an invention that generated a burst of enthusiasm about electricity’s potential to light and power the world.

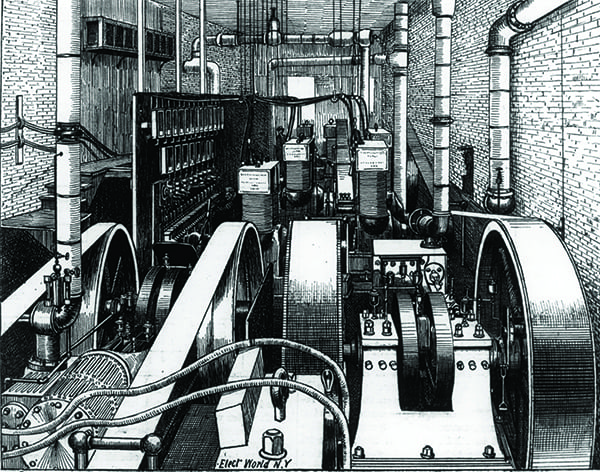

By 1877, as the streets of many cities across the world were being lit by arc lighting (though not ordinary rooms, because arc lights were still blindingly bright), Ohio-based Charles F. Brush had developed and begun selling the most reliable dynamo design to that point, and a host of forward thinkers were actively exploring the promise of large-scale electricity distribution. Then, in 1879, Thomas Edison invented a lower-intensity incandescent lamp, and in September 1882, only a month before the inaugural issue of POWER magazine was published, he established a central generating station at Pearl Street (Figure 1) in lower Manhattan.

1. Pearl Street Station. Thomas Edison in September 1882 achieved his vision of a full-scale central power station with a system of conductors to distribute electricity to end-users in the high-profile business district in New York City. Source: U.S. Department of Energy


A History Rooted in Coal

Advances in alternating-current (AC) technology opened up new realms for power generation (see sidebar “Tesla and the War of the Currents”). Hydropower, for example, marked several milestones between 1890 and 1900 in Oregon, Colorado, Croatia (where the first complete multiphase AC system was demonstrated in 1895), at Niagara Falls, and in Japan.

Tesla and the War of the Currents

Today, most people recognize the name Tesla as a company that makes electric vehicles, but the real genius behind the name is not Elon Musk—it’s the Serbian-American engineer and physicist Nikola Tesla. Tesla, for all intents and purposes, was the man who pioneered today’s modern alternating-current (AC) electricity supply system.

Tesla was born in 1856 in Smiljan, Croatia, which was then part of the Austrian Empire. He studied math and physics at the Technical University of Graz in Austria and also studied philosophy for a time at the University of Prague, in what is now the Czech Republic. In 1882, he moved to Paris and got a job repairing direct-current (DC) power plants with the Continental Edison Co. Two years later, he immigrated to the U.S., where he became a naturalized citizen in 1891.

Upon arrival in the U.S., Tesla found a job working for Thomas Edison in New York City, which he did for about a year. Edison was reportedly impressed by Tesla’s skill and work ethic, but the two men were very different in their methods and temperament. Tesla solved many problems through visionary revelations, whereas Edison relied more on practical experiments and trial and error. Furthermore, Tesla believed strongly that AC electrical systems were more practical for large-scale power delivery, whereas Edison championed DC systems, in what has since come to be known as the “War of the Currents.”

Tesla left Edison’s company following a payment dispute over one of Tesla’s dynamo improvements. After his departure, Tesla struggled for a time to find funding to start his own business. When he finally did get his company going, Tesla moved quickly, and over the span of about two years he was granted more than 30 patents for his inventions, which included a whole polyphase system of AC dynamos, transformers, and motors.

Word of Tesla’s innovative ideas spread, leading to an invitation for him to speak before the American Institute of Electrical Engineers. It was here that Tesla caught the eye of George Westinghouse, another AC power pioneer. Soon thereafter, Westinghouse bought the rights to Tesla’s patents and momentum for AC systems picked up.

Perhaps the most important battle in the War of the Currents occurred in 1893 during the World’s Columbian Exposition, the official name of the World’s Fair that year, held in Chicago, Illinois. Westinghouse’s company won the bid over Edison’s company to power the fair with electricity, and it did so with Tesla’s polyphase system.

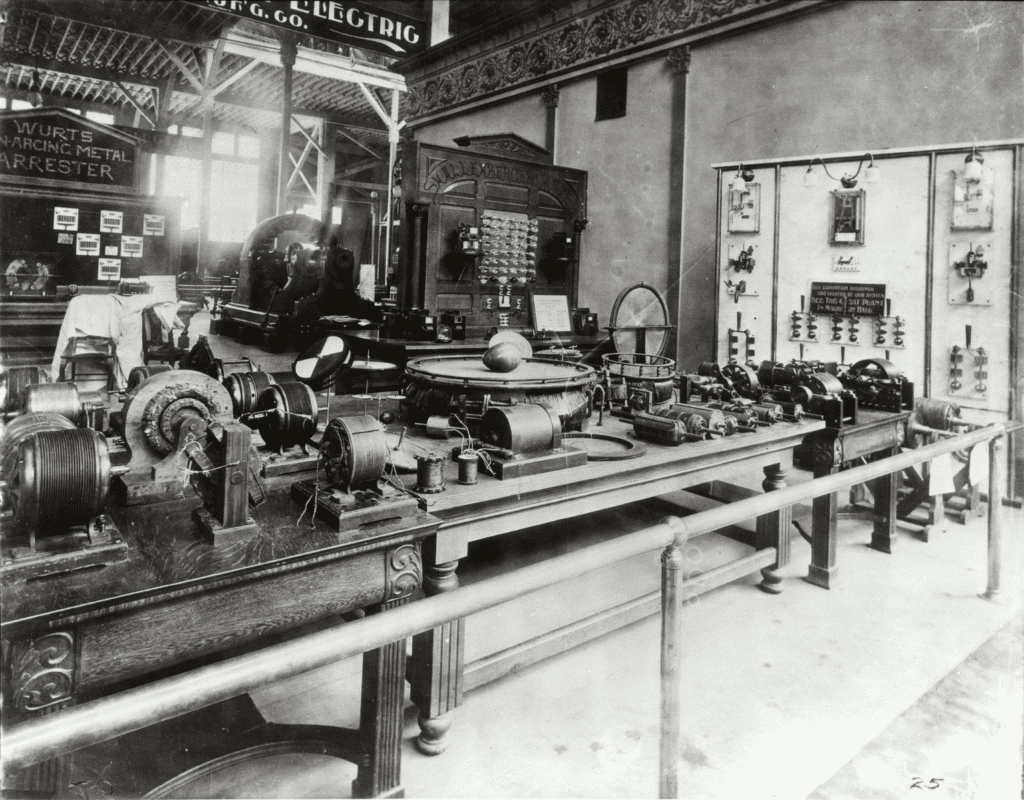

Among the exhibits (Figure 2) displayed at the fair were a switchboard, polyphase generators, step-up transformers, transmission lines, step-down transformers, industrial-sized induction motors and synchronous motors, rotary DC converters, meters, and other auxiliary devices. The demonstration allowed the public to see what an AC power system could look like and what it would be capable of doing. The fact that power could be transmitted over long distances, and even used to supply DC systems, opened many people’s eyes to the benefits AC could provide and spurred movement toward a nationwide AC-powered grid.

2. A Display Filled with Innovation. Westinghouse Electric Corp. exhibit of electric motors built on Nikola Tesla’s patent, and demonstrations of how they function, at the 1893 World’s Columbian Exposition (World’s Fair) in Chicago, Illinois. Also exhibited (front, center) are early Tesla Coils and parts from his high-frequency alternator. Courtesy: Detre Library & Archives, Heinz History Center

In addition to his electrical inventions, Tesla also experimented with X-rays, gave short-range demonstrations of radio communication, and built a radio-controlled boat that he demonstrated for spectators, among other things. Although Tesla was quite famous and respected in his day, he was never able to capture long-term financial success from his inventions. He died in 1943 at the age of 86.

By then, however, coal power generation’s place in power’s history had already been firmly established. The first coal-fired steam generators provided low-pressure saturated or slightly superheated steam for steam engines driving DC dynamos. Sir Charles Parsons, who built the first steam turbine generator (with a thermal efficiency of just 1.6%) in 1884, improved its efficiency two years later by introducing the first condensing turbine, which drove an AC generator.

About a decade later, in 1896, American inventor Charles Curtis offered an invention of a different turbine to the General Electric Co. (GE). By 1901, GE had successfully developed a 500-kW Curtis turbine generator, which employed high-pressure steam to drive rapid rotation of a shaft-mounted disk, and by 1903, it delivered the world’s first 5-MW steam turbine to the Commonwealth Edison Co. of Chicago. Subsequent models, which received improvement boosts suggested by GE’s Dr. Sanford Moss, were used mostly as mechanical drives or as peaking units.

By the early 1900s, coal-fired power units featured outputs in the 1-MW to 10-MW range and were outfitted with a steam generator comprising economizer, evaporator, and superheater sections. By the 1910s, the coal-fired power plant cycle was improved even more by the introduction of turbines with steam extractions for feedwater heating and steam generators equipped with air preheaters, all of which boosted net efficiency to about 15%.

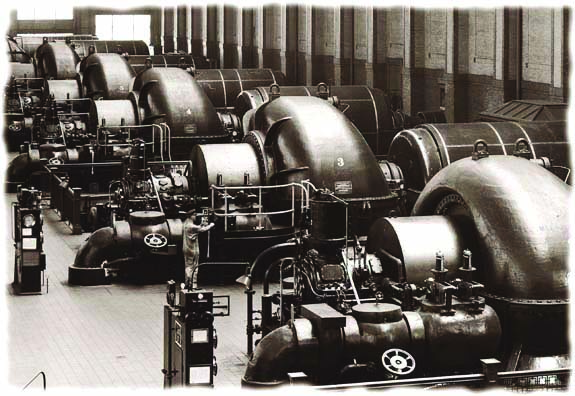

The demonstration of pulverized coal steam generators at the Oneida Street Station in Wisconsin in 1919 vastly improved coal combustion, allowing for bigger boilers (Figure 3). In the 1920s, another technological boost came with the advent of once-through boiler applications and reheat steam power plants, along with the Benson steam generator, which was built in 1927. Reheat steam turbines became the norm in the 1930s, when unit ratings soared to a 300-MW output level. Main steam temperatures consistently increased through the 1940s, and the decade also ushered in the first attempts to clean flue gas by removing dust. The 1950s and 1960s were characterized by more technical achievements to improve efficiency, including construction of the first once-through steam generator with a supercritical main steam pressure.

3. Purely pulverized. The 40-MW Lakeside Power Plant in St. Francis, Wisconsin, began operations in 1921. This image shows the steam turbines and generators at Lakeside, which was the world’s first plant to burn pulverized coal exclusively. Courtesy: WEC Energy Group

Unit ratings of 1,300 MW were reached by the 1970s. In 1972, the world’s first integrated coal gasification combined cycle power plant, a 183-MW plant for the German generator STEAG, began operations. Mounting environmental concerns and the subsequent passage of the Clean Air Act of 1970, signed by President Richard Nixon, also spurred technical solutions such as scrubbers to mitigate sulfur dioxide emissions. The decade ended with completion of a pioneering commercial fluidized bed combustion plant built on the Georgetown University campus in Washington, D.C., in 1979.

The early 1980s, meanwhile, were marked by the further development of emissions control technologies, including the introduction of selective catalytic reduction systems as a secondary measure to mitigate nitrogen oxide emissions. Component performance also improved vastly from that period into the 21st century. Among the most recent major milestones in coal power’s history is the 2014 completion of the first large-scale coal-fired power unit outfitted with carbon capture and storage technology, at Boundary Dam in Saskatchewan, Canada (see sidebar “The 2010s Marked Extraordinary Change for Power”).

The 2010s Marked Extraordinary Change for Power

Every one of the 14 decades that POWER magazine has been in print has been definitive for electric generation technology, policy, and business in some significant way, but few have been as transformative as the 2010s. The decade opened just as the global economy began to crawl toward recovery from a historic downturn that had bludgeoned industrial production and sent global financial markets into chaos.

The ensuing social consciousness fueled an environmental movement, which policymakers championed. Heightened concerns about climate change, driven by a globally embraced urgency to act, propelled energy transformations across the world, resulting in a clear shift in power portfolios away from coal and toward low- or zero-carbon resources. That shift has come with a concerted emphasis on flexibility. Effecting a notable cultural change, the decarbonization movement has been championed by power company shareholders and customers, and some of the biggest coal generators in the world have announced ambitions to go net-zero by mid-century.

However, the shift has also benefited from a hard economic edge. Transformations, for example, have been enabled by technology innovations that cracked open a vast new realm of natural gas supply, sent the prices of solar panels and batteries plummeting, and made small-scale decentralized generation possible, while the uptake of digitalization has soared, mainly in pursuit of efficiency gains.

Disruption continues to define the power industry today. The chaotic global pandemic that jolted the world in 2020 unfolded into a precarious set of energy crises in 2021. After a cold snap prompted mass generation outages across a swath of the central U.S., most prominently in Texas, volatile energy markets and power supply vulnerabilities fueled turmoil in California, China, India, and across Europe and Latin America. In 2022, Russia’s invasion of Ukraine and its economic war with the West prompted a new set of energy security concerns that have remained pitted against power affordability and sustainability interests.

Gas Power Takes Off

Coal power technology’s evolution was swift owing to soaring power demand and a burgeoning mining sector. The natural gas power sector, which today takes the lion’s share of both U.S. installed capacity and generation, was slower to take off. Although the essential elements of a gas turbine engine were first patented by English inventor John Barber in 1791, it took more than a century before the concepts were really put to practical use.

In 1903, Jens William Aegidius Elling, a Norwegian engineer, researcher, and inventor, built the first gas turbine to produce more power than was needed to run its own components. Elling’s first design used both rotary compressors and turbines to produce about 8 kW. He further refined the machine in subsequent years, and by 1912, he had developed a gas turbine system with separate turbine unit and compressor in series, a combination that is still common today.

Gas turbine technology continued to be enhanced by a number of innovative pioneers from various countries. In 1930, Britain’s Sir Frank Whittle patented a design for a jet engine. Gyorgy Jendrassik demonstrated Whittle’s design in Budapest, Hungary, in 1937. In 1939, a German Heinkel He 178 aircraft flew successfully with an engine designed by Hans von Ohain that used the exhaust from a gas turbine for propulsion. The same year, Brown Boveri Co. installed the first gas turbine used for electric power generation in Neuchatel, Switzerland. Both Whittle’s and von Ohain’s first jet engines were based on centrifugal compressors.

Innovations in aircraft technology, along with engineering and manufacturing advances during both World Wars, propelled gas power technology to new heights. At GE, for example, engineers who participated in development of jet engines put their know-how into designing a gas turbine for industrial and utility service. Following development of a gas turbine-electric locomotive in 1948, GE installed its first commercial gas turbine for power generation, a 3.5-MW heavy-duty unit, at the Belle Isle Station owned by Oklahoma Gas & Electric in July 1949 (Figure 4). Some experts point out that because that unit used exhaust heat for feedwater heating of a steam turbine unit, it was essentially also the world’s first combined cycle power plant. That same year, Westinghouse put online a 1.3-MW unit at the River Fuel Corp. in Mississippi.

4. Trailblazer. In 1949, General Electric installed the first gas turbine built in the U.S. for the purpose of generating power at the Belle Isle Station, a 3.5-MW unit, owned by Oklahoma Gas & Electric. Courtesy: GE

Large heavy-duty gas turbine technology improved rapidly thereafter. Firing temperatures were 1,300F (704C) in the early 1950s, had climbed to 1,500F (816C) by the late 1950s, and eventually reached 2,000F (1,093C) in 1975. By 1957, a general surge in gas turbine unit sizes had led to the installation of the first heat recovery steam generator (HRSG) for a gas turbine. By 1965, the first fully fired boiler combined cycle gas turbine (CCGT) power plant had come online, and by 1968, the first CCGT was outfitted with an HRSG. The late 1960s, meanwhile, were characterized by gas turbine suppliers starting to develop pre-designed or standard CCGT plants: GE developed the STAG (steam and gas) system, Westinghouse the PACE (Power At Combined Efficiency) system, and Siemens the GUD (gas and steam) system.

The past few decades, meanwhile, have been characterized by a proliferation of large heavy-duty gas turbines that are highly flexible and efficient, and can combust multiple fuels, including high volumes of hydrogen. That development is rooted in a combustion breakthrough in the 1990s that enabled a “lean premixed combustion process.” Compared to the early days of “dry-low NOx” (DLN) technology, when turbine inlet temperatures (TIT) of 1,350C–1,400C (for the vintage F class) were common, the past two decades have ushered in “advanced class” gas turbines with TIT pushing the 1,700C mark.

Advanced gas turbine technology has also led to new world records in CCGT efficiency and gas turbine power output. Specifically, GE Power announced in March 2018 that the Chubu Electric Nishi-Nagoya power plant Block-1, powered by a GE 7HA gas turbine and Toshiba Energy Systems & Solutions Corp.’s steam turbine and generator technology, had been recognized by Guinness World Records as the world’s “most efficient combined-cycle power plant,” based on achieving 63.08% gross efficiency. In August 2022, Duke Energy’s Lincoln Combustion Turbine Station, powered by a Siemens Energy SGT6-9000HL gas turbine, was certified with the official Guinness World Records title for the “most powerful simple-cycle gas power plant” with an output of 410.9 MW.
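To put the efficiency milestones cited in this article into the terms plant engineers usually track, the short Python sketch below converts each quoted efficiency into an approximate heat rate, the fuel heat input needed per kilowatt-hour of electricity. It assumes the standard conversion of 3,412.14 Btu per kWh; the function name `heat_rate_btu_per_kwh` is illustrative, and because the quoted figures mix gross and net bases, the comparison is indicative only.

```python
# Approximate heat rate implied by a thermal efficiency.
# Assumption: 1 kWh of electricity is equivalent to 3,412.14 Btu.
BTU_PER_KWH = 3412.14


def heat_rate_btu_per_kwh(efficiency: float) -> float:
    """Return fuel heat input (Btu) per kWh for a fractional efficiency in (0, 1]."""
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be a fraction between 0 and 1")
    return BTU_PER_KWH / efficiency


# Efficiencies quoted in the article; gross vs. net bases differ,
# so the resulting heat rates are only a rough comparison.
milestones = {
    "Parsons steam turbine, 1884": 0.016,
    "Coal plants with feedwater heating, 1910s": 0.15,
    "Nishi-Nagoya Block-1 CCGT (gross), 2018": 0.6308,
}

for label, eff in milestones.items():
    print(f"{label}: {eff:.2%} -> {heat_rate_btu_per_kwh(eff):,.0f} Btu/kWh")
```

Because heat rate is the inverse of efficiency, lower is better: the jump from about 1.6% to roughly 63% efficiency means each kilowatt-hour needs on the order of 40 times less fuel heat.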

Atomic Discoveries

Although the concept of the atom was fairly well developed, scientists had not yet figured out how to harness the energy contained in atoms when the first issue of POWER magazine was published. But 13 years later, in 1895, the accidental discovery of X-rays by Wilhelm Röntgen started a wave of experimentation in the atomic field.

In the years that followed, radiation emitted by uranium was discovered by Antoine Henri Becquerel, a French physicist; the Curies, Marie and Pierre, conducted additional radiation research and coined the term “radioactivity”; and Ernest Rutherford, a New Zealand-born British physicist whom many consider the father of nuclear science, postulated the structure of the atom, proposed the laws of radioactive decay, and conducted groundbreaking research into the transmutation of elements.

Many other scientists were helping advance the world’s understanding of atomic principles. In 1905, Albert Einstein published his theory of special relativity, from which follows the mass-energy equivalence E = mc², where E is energy, m is mass, and c is the speed of light. Niels Bohr published his model of the atom in 1913, and that picture was later refined when James Chadwick discovered the neutron in 1932.
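For a sense of scale, here is a simple worked example (an illustration added for context, not a figure from the historical record) applying the mass-energy relation E = mc² to one gram of mass, assuming c ≈ 3.00 × 10⁸ m/s and 1 GWh = 3.6 × 10¹² J:

```latex
E = mc^2 = (1.0\times10^{-3}\,\mathrm{kg})\,(3.00\times10^{8}\,\mathrm{m/s})^2
  \approx 9.0\times10^{13}\,\mathrm{J} \approx 25\,\mathrm{GWh}
```

Fission converts only about 0.1% of the fuel’s mass into energy, yet even that small fraction makes nuclear fuel extraordinarily energy-dense compared with chemical fuels.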

Enrico Fermi, an Italian physicist, showed in 1934 that neutrons could split atoms. Two German scientists, Otto Hahn and Fritz Strassmann, expanded on that knowledge in 1938 when they discovered fission, and Lise Meitner and Otto Frisch, applying Einstein’s theory, showed that the mass lost in the process is converted to energy.

Early Nuclear Reactors

Scientists then turned their attention to developing a self-sustaining chain reaction. To do so, a “critical mass” of uranium needed to be placed under the right conditions. Fermi, who emigrated to the U.S. in 1938 to escape fascist Italy’s racial laws, led a group of scientists at the University of Chicago in constructing the world’s first nuclear reactor.

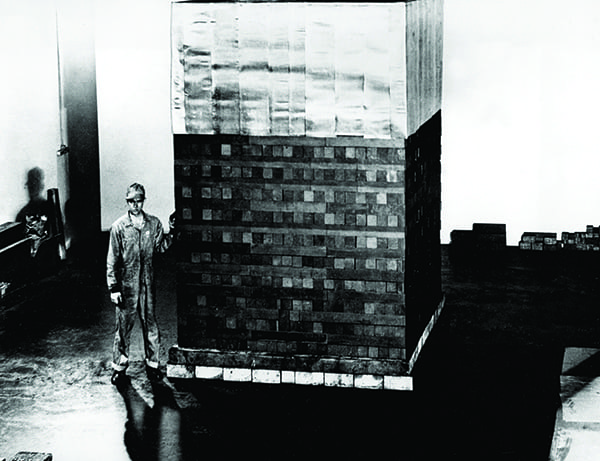

The team’s design consisted of uranium placed in a stack of graphite to make a cube-like frame of fissionable material. The pile, known as Chicago Pile-1, was erected on the floor of a squash court beneath the University of Chicago’s athletic stadium (Figure 5). On December 2, 1942, the first self-sustaining nuclear chain reaction was demonstrated in Chicago Pile-1.

5. World’s first nuclear reactor. Chicago Pile-1 was an exponential pile. At least 29 exponential piles were constructed in 1942 under the West Stands of the University of Chicago’s Stagg Field. Source: U.S. Department of Energy

But the U.S. was a year into World War II at the time, and most of the atomic research being done then was focused on developing weapons technology. It was not until after the war that the U.S. government began encouraging the development of nuclear energy for peaceful civilian purposes. The first reactor to produce electricity from nuclear energy was Experimental Breeder Reactor I, on December 20, 1951, in Idaho.

The Soviet Union had a burgeoning nuclear power program at the time too. Its scientists modified an existing graphite-moderated channel-type plutonium production reactor for heat and electricity generation. In June 1954, that unit, located in Obninsk, began generating electricity. A few years later, on December 18, 1957, the first commercial U.S. nuclear power plant—Shippingport Atomic Power Station, a light-water reactor with a 60-MW capacity—was synchronized to the power grid in Pennsylvania.

The U.S. and Soviet Union weren’t the only countries building nuclear plants, however. The UK, Germany, Japan, France, and several others were jumping on the bandwagon too. The industry grew rapidly during the 1960s and 1970s. Nuclear construction projects were on drawing boards across the U.S., with 41 new units ordered in 1973 alone. But slower electricity demand growth, construction delays, cost overruns, and complicated regulatory requirements put an end to the heyday in the mid-1970s. Nearly half of all planned U.S. projects ended up being canceled. Nonetheless, by 1991 the U.S. had twice as many operating commercial reactors (112 units) as any other country in the world.

Nuclear power’s history is marred by three major accidents. The first was the partial meltdown of Three Mile Island Unit 2 on March 28, 1979. A combination of equipment malfunctions, design-related problems, and worker errors led to the meltdown. The second major accident occurred on April 26, 1986. That event was triggered by a sudden surge of power during a reactor systems test on Unit 4 at the Chernobyl nuclear power station in Ukraine, then part of the Soviet Union. The accident and a subsequent fire released massive amounts of radioactive material into the environment.

The most recent major accident occurred following a 9.0-magnitude earthquake off the coast of Japan on March 11, 2011. The quake caused the Fukushima Daiichi station to lose all off-site power. Backup systems worked, but 40 minutes after the quake, a 14-meter-high tsunami struck the area, knocking some of them out. Three reactors eventually overheated, melting their cores to some degree, and hydrogen explosions then spread radioactive contamination throughout the area.

The consequences of accidents have played a role in decisions to phase out or cut back reliance on nuclear power in some countries, including Belgium, Germany, Switzerland, and Spain. Nonetheless, China, Russia, India, the United Arab Emirates, the U.S., and others continue to build new units, incorporating much modern power plant technology (see sidebar “The Rise of IIoT”).

The Rise of IIoT

Power plant automation was already well advanced by 2009, when more facilities shed boiler-turbine-generator boards and vertical panels populated with indicators and strip chart recorders in favor of open systems using industry-standard hardware and software. By then, companies were already beginning to grasp the value of digital modeling and virtual simulation, as well as sensors and wireless technologies.

The definitive shift arrived around 2012 with the introduction of the concept of the “industrial internet of things” (IIoT)—a term GE claims it coined—which described the connection between machines, advanced analytics, and the people who use them. According to GE, IIoT is “the network of a multitude of industrial devices connected by communications technologies that results in systems that can monitor, collect, exchange, analyze, and deliver valuable new insights like never before. These insights can then help drive smarter, faster business decisions for industrial companies.”

In the power sector, IIoT grew into a multi-billion-dollar industry. It has since enabled predictive analytics to forecast and detect component issues, provided real-time production data (Big Data) that has opened up a realm of possibilities, and enabled software solutions, all of which have boosted efficiency, productivity, and performance. Over the last decade, several companies have rolled out comprehensive power plant–oriented IIoT platforms, such as GE’s Predix and Siemens’ MindSphere, and driven the rapid development of technologies that benefit from them, such as fourth-generation sensors, Big Data, edge intelligence, augmented and virtual reality, and artificial intelligence and machine learning.

Separately, these and other digital possibilities have opened up a vast realm of intelligence for other parts of the power sector, including the grid, with applications such as forecasting, grid stability, outage response, distributed energy resource orchestration, communications, and mobility. Component manufacturing, too, is evolving digitally, with advances in 3D printing, development of new materials, and process intensification.

Renewables: The World’s Oldest and Newest Energy Sources

While humans have been harnessing energy from the sun, wind, and water for thousands of years, technology has changed significantly over the course of history, and these ancient energy types have developed into state-of-the-art innovative power generation sources.

Chasing Water. What became modern renewable energy generation got its start in the late 1800s, around the time that POWER launched. Hydropower was first to transition to a commercial electricity generation source, and it advanced very quickly. In 1880, Michigan’s Grand Rapids Electric Light and Power Co. generated DC electricity using hydropower at the Wolverine Chair Co. A belt-driven dynamo powered by a water turbine at the factory lit 16 arc street lamps.

Just two years later, the world’s first central DC hydroelectric station powered a paper mill in Appleton, Wisconsin. By 1886, there were 40 to 50 hydroelectric plants operating in the U.S. and Canada alone, and by 1888, roughly 200 electric companies relied on hydropower for at least some of their electricity generation (see sidebar “The Evolution of Power Business Models”). In 1889, the Willamette Falls Station in Oregon City, Oregon, came online as the nation’s first AC hydroelectric plant.

The Evolution of Power Business Models

The modern electric utility was born when Thomas Edison invented the practical lightbulb in 1879 and, to spur demand for the novel invention, developed an entire power system that generated and distributed electricity. While the concept caught on in several cities in the early years, exorbitant costs meant that power companies rarely owned multiple power plants.

Samuel Insull, who began his role as president at Chicago Edison in 1892, is credited with first exploiting load factor, not only finding power customers for off-peak times, but leveraging technology including larger generation systems and alternating current to produce and transmit power more cheaply. Crucially, he is also said to have pioneered consolidation; he acquired 20 small utilities by 1907 to give birth to a natural monopoly—Commonwealth Edison.

The model was widely emulated, and for decades, scale economies associated with large centralized generation technologies encouraged vertical integration to drive down power costs, encourage universal access, and provide reliability. To enforce the responsibilities and rights of these investor-owned utilities (IOUs) and their customers, however, this approach also spurred more government oversight and regulation. It also gave rise to municipal ownership and, later, as a New Deal measure, to public power to enable rural electrification.

Business models began to shift more distinctively starting in the late 1970s, as environmental policy, the oil shocks, and initiatives to open the airline and trucking industries to competition disrupted the status quo. The evolution of the last 40 years, which has left an enduring legacy, is often sorted into two big buckets: the introduction of competition, and reform of the way monopoly utilities operate. A key step in initiating reforms in the U.S. was passage of the Public Utility Regulatory Policies Act (PURPA) of 1978, which essentially created opportunities for smaller generators to get into the game.

The final, but just as significant, historical upheaval was energy deregulation. In the 1990s, viewing competition as the most effective driver of low power costs, several states also moved to end monopoly protections for retail sales, though after California’s high-profile energy crisis in 2000 and 2001, many scaled back their plans and even re-regulated their retail sectors. Deregulation played the important role of giving rise to new retail companies, whose business models were focused exclusively on delivery of power to the customer.

Whether and how these models will thrive, however, is uncertain. Decarbonization, decentralization, and digitalization, as well as the recent impact of the COVID-19 pandemic, have slowed or reversed growth in power demand. At the same time, traditional regulatory approaches, which have been predicated on growth, must grapple with an increasingly complex industry landscape. The past decades have ushered in two-way flows of electricity, more stringent environmental policies, and state goals. Regulators are challenged with ensuring that power systems operate efficiently, comply with environmental goals, remain reliable, and address equity issues for low-income customers.

Internationally, Switzerland was at the forefront of pumped storage, opening the world’s first such plant in 1909. Pumped storage wasn’t integrated into the U.S. energy mix until 1930, when the Connecticut Electric Light and Power Co. built a pumped storage plant in New Milford, Connecticut.

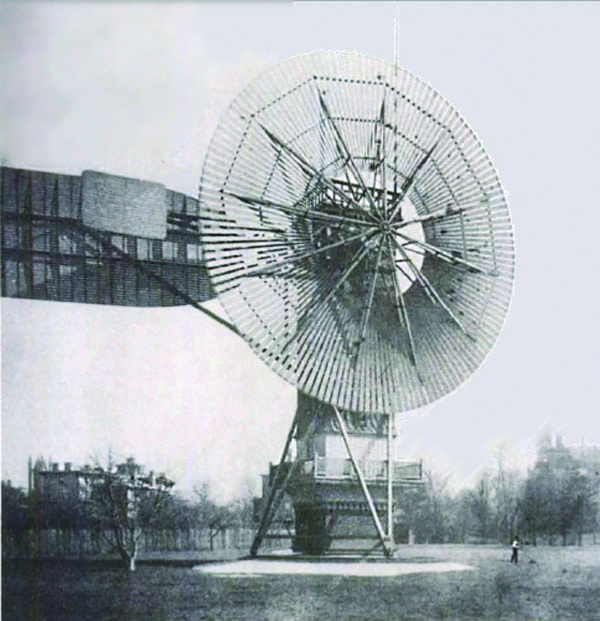

Blowing in the Wind. At about the same time that hydropower was gaining popularity, inventors were also figuring out how to use the windmills of the past to generate electricity for the future. In 1888, Charles Brush, an inventor in Ohio, constructed a 60-foot wind turbine in his backyard (Figure 6). The windmill’s wheel was 56 feet in diameter and had 144 blades. A shaft inside the tower turned pulleys and belts, which spun a 12-kW dynamo that was connected to batteries in Brush’s basement.

6. Birth of the wind turbine. In 1888, Charles Brush, an inventor in Ohio, constructed a 60-foot wind turbine capable of generating electricity in his backyard. Source: Wikimedia Commons

Wind-powered turbines spread slowly and with little fanfare throughout the world. The American Midwest, where the turbines were used to power irrigation pumps, saw numerous installations. In 1941, the world’s first megawatt-scale wind turbine, a 1.25-MW machine, was connected to the grid on a hill in Castleton, Vermont, called Grandpa’s Knob.

Interest in wind power was renewed by the oil crisis of the 1970s, which spurred research and development. Wind power in the U.S. got a policy boost when President Jimmy Carter signed the Public Utility Regulatory Policies Act of 1978, which required utilities to buy electricity from qualifying independent generators, including wind projects, at the utilities’ avoided cost.

By the 1980s, the first utility-scale wind farms began popping up in California. Europe has been the leader in offshore wind, with the first offshore wind farm installed in 1991 in Denmark. According to WindEurope, Europe had 236 GW of installed wind capacity at the end of 2021, a significant increase from the 12.6 GW of capacity that was grid-connected five years earlier.

In late 2016, the first offshore wind farm in the U.S., a five-turbine, 30-MW project, began operating in the waters off Block Island, Rhode Island. Yet by 2022, only one additional offshore wind project had been added to the U.S. grid: the two-turbine, 12-MW Coastal Virginia Offshore Wind pilot project. Onshore wind installations, however, have fared much better. By mid-2022, more than 139 GW of onshore wind capacity was grid-connected in the U.S., according to American Clean Power, a renewable energy advocacy group.

Let the Sunshine In. Compared with other commercially available renewable energy sources, solar power is in its infancy, though the path that led to its commercial use began almost 200 years ago. In 1839, French scientist Edmond Becquerel discovered the photovoltaic (PV) effect while experimenting with an electrolytic cell made of two metal electrodes in a conducting solution. Becquerel found that the cell generated more electricity when it was exposed to light.

More than three decades later, English electrical engineer Willoughby Smith discovered the photoconductivity of selenium. In 1883, New York inventor Charles Fritts created the first solar cell by coating selenium with a thin layer of gold; the cell had an energy conversion efficiency of just 1–2%.

It wasn’t until the 1950s, however, that silicon solar cells were produced commercially. In 1954, physicists at Bell Laboratories determined that silicon was more efficient than selenium. The cell Bell Labs created was “the first solar cell capable of converting enough of the sun’s energy into power to run everyday electrical equipment,” according to the U.S. Department of Energy (DOE).

By the 1970s, the efficiency of solar cells had increased, and they began to be used to power navigation warning lights and horns on many offshore gas and oil rigs, lighthouses, and railroad crossing signals. Domestic solar applications began to be viewed as sensible alternatives in remote locations where grid-connected options were not affordable.

The 1980s saw significant progress toward more-efficient, larger-scale solar projects. In 1982, the first megawatt-scale PV power station, developed by ARCO Solar, came online in Hesperia, California. Also in 1982, the DOE began operating Solar One, a 10-MW central-receiver demonstration project and the first project to prove the feasibility of power tower technology. Then, in 1992, researchers at the University of South Florida developed a 15.9%-efficient cadmium telluride thin-film PV cell, breaking the 15% efficiency barrier for that technology. By the mid-2000s, residential solar power systems were available for sale in home improvement stores.

In 2016, utility-scale solar facilities accounted for less than 0.9% of U.S. electricity generation. The solar industry has gained significant momentum since then, however. According to the Solar Energy Industries Association, more than 126 GW of solar capacity had been installed in the U.S. by the end of March 2022, and the U.S. Energy Information Administration reported that almost 4% of U.S. electricity came from solar energy in 2021. (For more details on the history of all power generation types, see supplements associated with this issue at powermag.com.) ■

—Sonal Patel is a POWER senior associate editor, and Aaron Larson is executive editor of POWER. Abby Harvey, a former POWER reporter, also contributed to this article.

[Ed. note: This article was originally published in the October 2017 issue of POWER. Updates are made to the article on a regular basis.]
