What Is Network Latency? Typical Causes & Ways to Reduce It – Sematext

So you finally launched your service worldwide, great! The next thing you’ll see is thousands and thousands of people flooding into your amazing website from all corners of the world expecting to have the same experience regardless of their location.

Here is where things get tricky. Building an infrastructure that supports the expansion of your service across the globe without sacrificing user experience is going to be really tough, as distance introduces latency.

So in this post we will go through everything you need to know about latency, from what it is to common causes and how you can measure and reduce it to improve performance and ensure a flawless experience for all your users regardless of their location around the world.


Definition: What Is Network Latency?

Network latency, sometimes called network lag, is the time it takes for a request to travel from the sender to the receiver and for the receiver to process that request. In other words, in networking, latency refers to the time it takes for a request sent from the browser to be processed and returned by the server.

When communication delays are small, we talk about a low-latency network; when delays are longer, a high-latency network. Any delay affects website performance.

Consider a simple e-commerce store that caters to users worldwide. High latency will make browsing categories and products very difficult, and in a space as competitive as online retailing, a few extra seconds can amount to a fortune in lost sales.

How Does Network Latency Affect Website Performance?

There are no two ways about it. Your app will react differently based on your user’s location and it has to do with something we call server latency.

The experience of your customers living in a different part of the world will be vastly different from what you are seeing in your tests. It's crucial to gather data from every location your users live in so that you can take the necessary steps to fix the issue.

Providing an overall good service across the world is not just good for your image, it’s good for your business. You’ll soon come to realize that bad user experience will affect your bottom line directly.

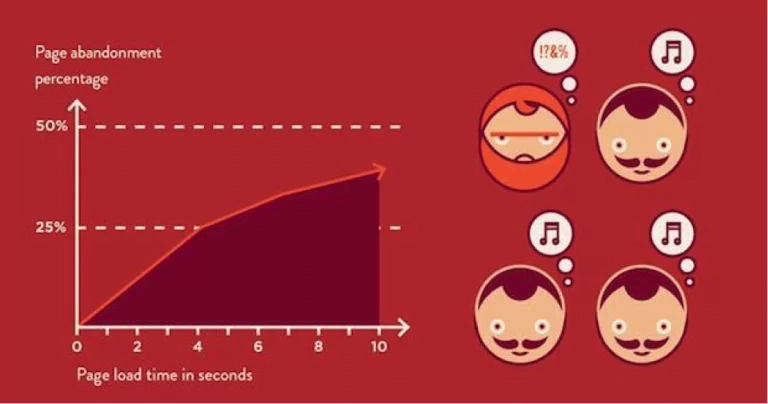

source: fastcompany.com

To use the eCommerce example again, here is a figure cited by Yoast that puts things into perspective: 79% of online shoppers say they won't go back to a website if they've had trouble with load speed.

And let’s not forget that fast-loading sites have higher conversion rates and lower bounce rates. So it’s not a matter of whether you should or shouldn’t invest in optimizing website speed across multiple regions, but rather if you can afford not to.

What Causes Network Latency?

There are thousands of little variables that make up your network latency but they mainly fall under one of these 4 categories:

- Transmission mediums – Your data travels across large distances in different forms, either as electrical signals over copper cabling or as light waves over fiber optics. Every time it switches from one medium to another, a few extra milliseconds are added to the overall transmission time.

- Payload – The more data gets transferred, the slower the communication between client and server.

- Routers – Routers take time to analyze the header information of a packet and, in some cases, add additional information. Each hop a packet takes from router to router increases the latency. Furthermore, depending on the device, there can be MAC address and routing table lookups.

- Storage Delays – Delays can occur when a packet is stored or accessed by intermediate devices like switches and bridges.

What Is a Good Latency?

Network latency is measured in milliseconds (ms). During speed tests, it’s referred to as ping rate. Obviously, the closer the latency is to zero, the better. But that’s not possible. There’s always going to be some delay created by the client itself as the browser needs time to process the requests and render the response.

Taking that into consideration along with the fact that each case is unique in its own way, we can't say there's a universal number as to what a good latency is. In general, however, an acceptable latency is considered to be anything under 150 milliseconds. At the same time, all the elements of your website, from images to scripts, dynamic elements, and everything in between, need to load in under 3 seconds.

Three seconds might sound like plenty of time to load a few KB of data, but the truth is that you should be very mindful of the size and complexity of your code and requests, as they take a while to load and render.
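To get a feel for how latency and payload eat into that 3-second budget, here is a rough back-of-the-envelope sketch in Python. The model (a fixed number of sequential round trips plus raw transfer time) and the example numbers are assumptions for illustration, not measurements. Real browsers fetch resources in parallel and real connections ramp up gradually, so treat the result as a sanity check rather than a prediction.

```python
BUDGET_S = 3.0  # the page-load target mentioned above


def estimated_load_time_s(
    rtt_ms: float,
    round_trips: int,
    payload_bytes: int,
    bandwidth_mbps: float,
) -> float:
    """Very rough load-time estimate: latency cost plus transfer cost.

    Assumes the round trips happen one after another and that the
    full bandwidth is available for the whole transfer.
    """
    latency_s = round_trips * rtt_ms / 1000.0
    transfer_s = payload_bytes * 8 / (bandwidth_mbps * 1_000_000)
    return latency_s + transfer_s
```

For example, `estimated_load_time_s(rtt_ms=150, round_trips=10, payload_bytes=2_000_000, bandwidth_mbps=10)` spends 1.5 seconds on round trips and 1.6 seconds on transfer, which already puts the page slightly over `BUDGET_S`.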

Best Practices for Monitoring and Improving Network Latency

Latency has a direct impact on your business as it affects how your users interact with your services. Time is a very important commodity and you can't just expect your users to wait patiently for things to load.

You want to keep an eye on your latency and make sure it doesn't go up for any reason, but that's not enough. You also have to keep a close eye on your competitors and make sure you aren't losing any ground to them when it comes to the quality of your service.

How to Check Network Latency

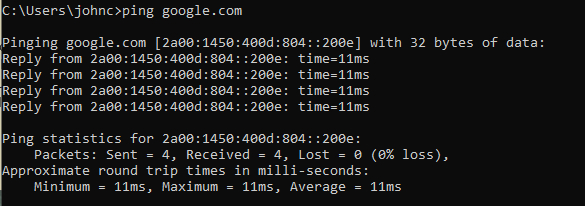

The next question you might ask yourself is how you actually check the latency of your website or app. If you are not picky, you could just ping the server using the command-line tool available on Mac, Windows, and Linux and check out the RTT.

You open the command prompt or terminal window and type in “ping google.com” (or any other domain).

The RTT or round trip time will basically tell you what the latency of that particular site is.
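If you would rather collect these numbers from a script, one rough stand-in is to time how long it takes to open a TCP connection. The Python sketch below does that with the standard library. It is not the same thing as an ICMP ping (the first sample for a hostname also includes DNS lookup time), and the function names are made up for this example.

```python
import socket
import statistics
import time


def tcp_connect_rtt_ms(host: str, port: int = 443, timeout: float = 3.0) -> float:
    """Return the time in milliseconds needed to open a TCP connection."""
    start = time.perf_counter()
    # create_connection resolves the hostname and completes the TCP
    # handshake, which costs roughly one network round trip.
    with socket.create_connection((host, port), timeout=timeout):
        pass
    return (time.perf_counter() - start) * 1000.0


def summarize_ms(samples: list[float]) -> dict[str, float]:
    """Summarize samples the way ping does: min, average, and max."""
    return {
        "min": min(samples),
        "avg": statistics.fmean(samples),
        "max": max(samples),
    }
```

Calling `summarize_ms([tcp_connect_rtt_ms("google.com") for _ in range(5)])` gives you a min/avg/max summary similar to the last line of a ping run.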

If this were enough, this is where I would stop, but unfortunately, things are not that simple. The test I've mentioned above will give you an idea of what your latency is at the moment, but that's not to say your latency will not change over time. In fact, it won't even accurately describe what your users might be experiencing right now.

How to Measure Network Latency

Network latency is measured by two metrics: Time to First Byte and Round Trip Time. You can use either of them to test your network. However, regardless of which one you choose, make sure to record all your results with the same metric so they stay comparable. It's important to monitor any changes and address the culprits if you want to ensure a smooth user experience and keep your customer satisfaction score high.

Time to First Byte

Time to First Byte (TTFB) is the time it takes for the first byte of each file to reach the user's browser after a server connection has been established.

The TTFB itself is affected by three main factors:

- The time it takes for your request to propagate through the network to the web server

- The time it takes for the web server to process the request and generate the response

- The time it takes for the response to propagate back to your browser
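To see these three factors add up in practice, here is a minimal Python sketch that approximates TTFB with the standard library's `http.client`. It opens the connection first and only then starts the clock, matching the definition above. Strictly speaking it measures the time until the response status line and headers have been read, which is a close approximation of the first byte. The function name and defaults are assumptions made for this example.

```python
import http.client
import time


def measure_ttfb_ms(
    host: str,
    path: str = "/",
    port: int = 443,
    use_tls: bool = True,
    timeout: float = 5.0,
) -> float:
    """Approximate Time to First Byte for a single GET request, in ms."""
    conn_cls = http.client.HTTPSConnection if use_tls else http.client.HTTPConnection
    conn = conn_cls(host, port, timeout=timeout)
    try:
        # Establish the connection up front so that connection setup
        # is not counted as part of TTFB.
        conn.connect()
        start = time.perf_counter()
        conn.request("GET", path)
        # getresponse() returns once the status line and headers have
        # arrived: request travel + server processing + response travel.
        conn.getresponse()
        return (time.perf_counter() - start) * 1000.0
    finally:
        conn.close()
```

A call such as `measure_ttfb_ms("example.com")` returns a single sample; in practice you would take several and look at the spread.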

Round Trip Time

Round Trip Time (RTT), also called Round Trip Delay (RTD), is the time it takes for a browser to send a request and receive a response from a server. RTT is perhaps the most popular metric for measuring network latency, and it is measured in milliseconds (ms).

RTT is influenced by a few key components of your network:

- Distance – The bigger the distance between server and client the longer it takes to get the signal back.

- Transmission medium – The medium used to route a signal. It could be copper cable, optical fiber, wireless, or satellite, all of which behave quite differently and can affect your RTT drastically.

- The number of network hops – Intermediate routers or servers take time to process a signal, increasing RTT. The more hops a signal has to travel through, the higher the RTT, resulting in a higher latency.

- Traffic levels – RTT typically increases when a network is congested with high levels of traffic. Conversely, low traffic times can result in decreased RTT.
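Distance alone sets a floor on RTT that no amount of tuning can remove. The Python sketch below estimates that floor, assuming light travels through optical fiber at roughly two thirds of its speed in a vacuum. The velocity factor is an approximation, and the estimate ignores hops, congestion, and the fact that cables rarely follow a straight line.

```python
SPEED_OF_LIGHT_KM_S = 299_792.458  # speed of light in a vacuum
FIBER_VELOCITY_FACTOR = 0.67       # assumption: roughly 2/3 c in optical fiber


def min_rtt_ms(distance_km: float, velocity_factor: float = FIBER_VELOCITY_FACTOR) -> float:
    """Lower bound on round trip time from propagation delay alone."""
    one_way_s = distance_km / (SPEED_OF_LIGHT_KM_S * velocity_factor)
    # A round trip covers the distance twice.
    return 2 * one_way_s * 1000.0
```

For a path of about 5,570 km (roughly New York to London as the crow flies), `min_rtt_ms(5570)` comes out at around 55 ms before a single router has touched the packet.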

How to Reduce Network Latency

So you'll be dealing with a user base that's spread across multiple continents, you understand the importance of keeping your performance in good standing regardless of the user location, and you even understand what you need to look for. All that's left is the “how” part: how to reduce latency to ensure the best user experience. There are two types of monitoring approaches that will help improve network latency: synthetic monitoring and real user monitoring.

Synthetic monitoring tools such as Sematext Synthetics let you run artificial (hence the name synthetic) calls to your APIs and watch for any increase in latency or performance degradation. However, synthetic transactions are not going to show you how your users are using your services and what their experience is. Real user monitoring (RUM), also called end user experience monitoring, will.

In the past few years, the popularity of real user monitoring tools grew exponentially as a by-product of the increased number of companies that took their services and products global. An ever-growing need to monitor and understand user behavior led a lot of monitoring companies to switch from just monitoring server resources to looking at how users experience the website.

This is where Sematext Experience comes into play. It’s a RUM tool that provides insightful data about how your webapp is performing in all the different corners of the world by measuring data in real time directly from your users.

The solution offers a real-time overview of your users’ interactions with the website, allowing you to pinpoint exactly where your system is underperforming.

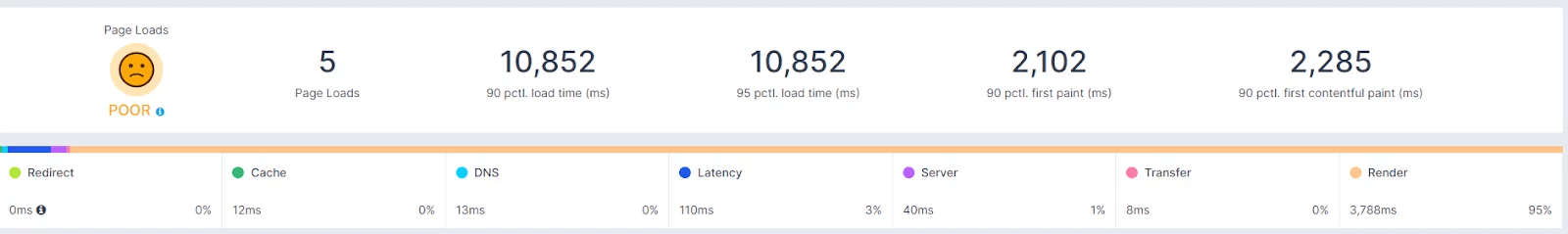

The advanced dashboard dedicated to Page Loads lets you see all the details on what causes latency and provides a breakdown by category, from DNS lookup time to server processing time or render time. From this, you can work out where your weak spots are and what areas you need to prioritize.

Besides providing key information on how the website is performing across different locations, it will also provide intel on how the website loads on different devices running at different connection speeds.


How to Troubleshoot Network Latency Issues

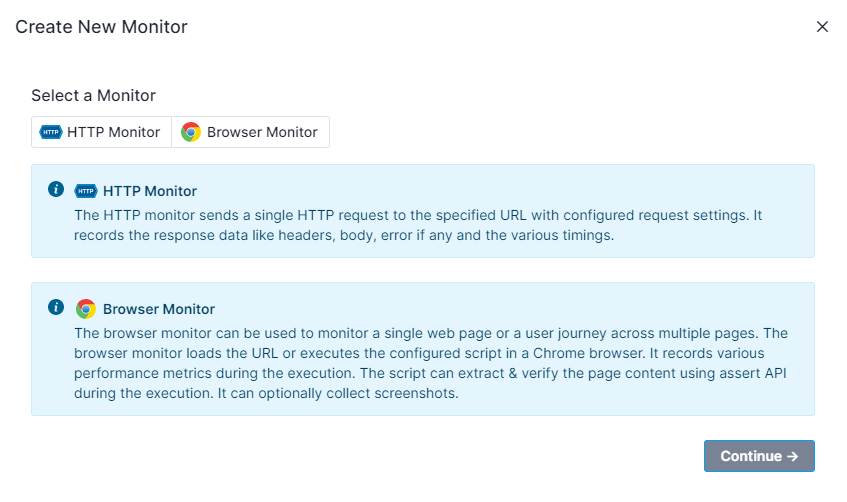

With tools like Sematext, troubleshooting network latency issues is going to be a breeze. You need to set up what we call monitors, which run specific tests on an endpoint, resource, or website and check the results against a predefined set of values. If a test fails, you get alerted and can jump in and figure out what affects the latency by looking at the error log.
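Conceptually, a monitor boils down to comparing measured values against a threshold. The Python sketch below shows that idea in its simplest form. It is a generic illustration and not Sematext's API: the names `MonitorResult` and `evaluate_monitor` are hypothetical, the choice of the 95th percentile is an assumption, and the 150 ms default simply reuses the "acceptable latency" figure from earlier in this post.

```python
import math
from dataclasses import dataclass


@dataclass
class MonitorResult:
    passed: bool
    p95_ms: float
    threshold_ms: float


def percentile(samples: list[float], pct: int) -> float:
    """Nearest-rank percentile of a non-empty list of samples."""
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def evaluate_monitor(samples_ms: list[float], threshold_ms: float = 150.0) -> MonitorResult:
    """Pass when the 95th percentile latency stays within the threshold."""
    p95 = percentile(samples_ms, 95)
    return MonitorResult(passed=p95 <= threshold_ms, p95_ms=p95, threshold_ms=threshold_ms)
```

Checking a percentile rather than the average keeps a single slow outlier from triggering an alert while still catching a consistently slow tail.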

Sematext Synthetics will let you automate calls to your services and APIs to ensure you are always on the lookout for latency degradation so that you can jump in front of the issue before your users get to experience it.

Here’s how it’s done.

Once you have signed up, you have to create a Synthetics app. The process is similar for the real user monitoring tool, Experience, so I'll just do one example here.

Depending on whether you want to monitor a resource or a website, you'll pick one or the other.

You must give it a name, set an interval for your tests, and pick a location from which you want the tests to run.

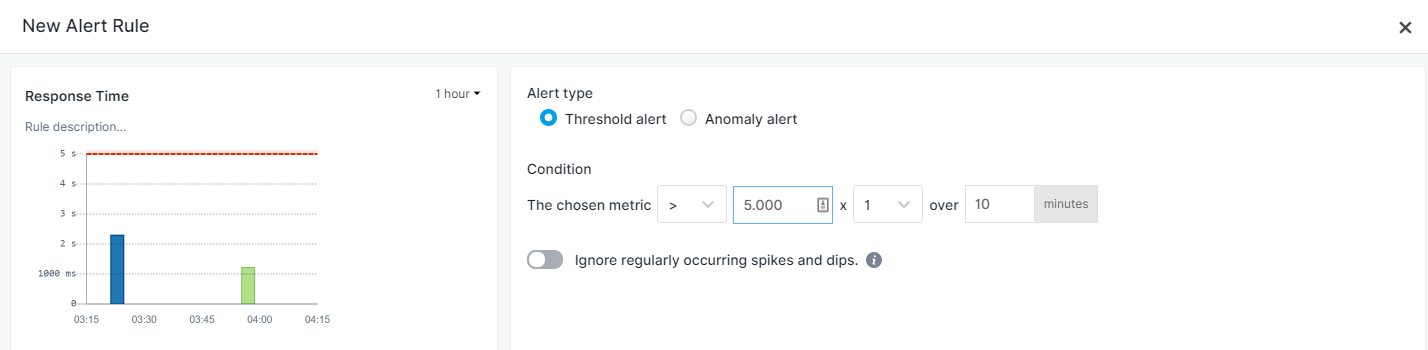

Next up, you create the alert I've mentioned previously. You need to spend time understanding what each metric represents in order to keep a close eye on the ones that are relevant to your users and could affect network latency.

The second one of these tests fails, you'll be notified through your preferred notification channel.

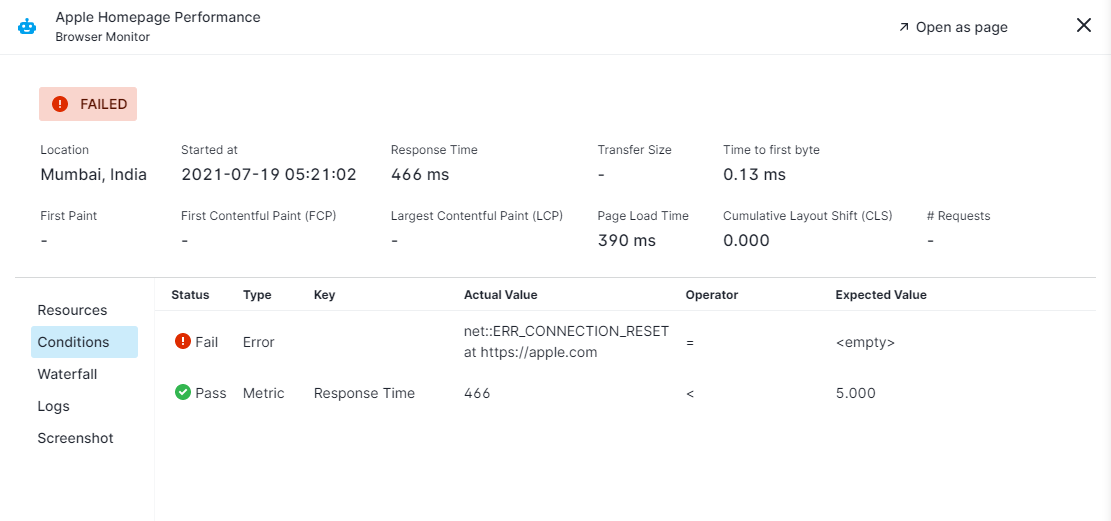

You can click the link you got in the message or go to your monitors and click on the failed run. This is where you get all the information related to the issue that caused the latency, which will help you dig to the core of the problem in no time.

Conclusion

How users experience your site influences whether they will return or not, which in turn directly impacts your bottom line.

Latency is always going to influence how your website performs, but with the proper tooling, you can mitigate its impact by addressing the main issues causing the latency in the first place. But if you're interested in providing the best possible experience to your users, you should keep an eye on more than network latency, so check out this article where we explain what the most important UX metrics are that you should monitor to improve website performance.
