What Does Backbone Mean in Neural Networks? | Baeldung on Computer Science


1. Intro

Neural networks are machine learning algorithms that we can use for many applications, such as image classification, object recognition, pattern prediction, and natural language processing. The main components of neural networks are layers and nodes.

Some neural network architectures have more than a hundred layers and several logical parts that solve different subproblems. One such part is a neural network backbone.

In this tutorial, we’ll describe what a backbone is and which types of backbones are the most popular.

2. Neural Networks

Neural networks are algorithms inspired by biological neural networks. Initially, the goal was to create an artificial system that functioned similarly to the human brain. Neurons and layers are the primary components of neural networks.

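Depending on the type of layers and neurons, there are three main categories of neural networks:

- Fully connected (feedforward) neural networks

- Convolutional neural networks (CNNs)

- Recurrent neural networks (RNNs)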

For instance, convolutional neural networks work better with images, while recurrent neural networks work better with sequential data. To explain what a backbone represents, we’ll use backbones in convolutional neural networks as our example. Moreover, the literature mostly uses the term backbone in the context of convolutional neural networks.

3. Convolutional Neural Networks

A convolutional neural network (CNN) is an artificial neural network that we primarily use to classify images, localize objects, and extract features from images, such as edges or corners. CNNs are successful largely because they can process large amounts of data, including images, videos, and even text.

These networks use convolution operations to process input data. When the input is an image, CNNs learn features at different levels of abstraction. For example:

- The initial layers of the network learn low-level features, such as lines, dots, and curves.

- Layers in the middle of the network learn more complex patterns, such as parts of objects, built on top of the low-level features.

- The final layers combine the features from the previous layers into high-level features and perform the assigned task, such as classification.
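To make the idea of a low-level feature concrete, here is a minimal pure-Python sketch of a single convolution step followed by a ReLU activation. The 5×5 image and the Sobel-style vertical-edge kernel are illustrative assumptions; a real CNN learns its kernel weights during training instead of using a hand-picked one.

```python
def conv2d(image, kernel):
    """Valid 2D convolution (no padding, stride 1).

    Like most deep learning libraries, this computes cross-correlation,
    i.e. the kernel is not flipped.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Weighted sum of the kh x kw window whose top-left corner is (i, j)
            total = 0.0
            for di in range(kh):
                for dj in range(kw):
                    total += image[i + di][j + dj] * kernel[di][dj]
            row.append(total)
        output.append(row)
    return output


def relu(feature_map):
    """Element-wise ReLU activation applied after the convolution."""
    return [[max(0.0, v) for v in row] for row in feature_map]


# Hypothetical 5x5 grayscale image: dark left part (0), bright right part (1)
image = [[0, 0, 1, 1, 1] for _ in range(5)]

# Hand-picked Sobel-style kernel that responds to vertical edges
vertical_edge = [
    [-1, 0, 1],
    [-2, 0, 2],
    [-1, 0, 1],
]

features = relu(conv2d(image, vertical_edge))
for row in features:
    print(row)
# [4.0, 4.0, 0.0]
# [4.0, 4.0, 0.0]
# [4.0, 4.0, 0.0]
```

The feature map is large only where the 3×3 window straddles the dark-to-bright boundary, which is the kind of line detector the initial layers of a CNN tend to learn. Deeper layers apply further convolutions to such feature maps to build more complex patterns.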

In addition, we can use networks that were pre-trained on a different data set. This is possible thanks to transfer learning: a neural network learns patterns on one data set and, with a little tweaking, reuses them on another. Of course, the more similar the two data sets are, the better results we can expect.
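Transfer learning can be sketched in a few lines of pure Python. In this minimal sketch, the function `backbone` is a hypothetical stand-in for a network pre-trained on another data set: it stays frozen, and only a small logistic-regression head on top of it is trained on the new data. The toy striped images and the hyperparameters `epochs` and `lr` are illustrative assumptions.

```python
import math


def backbone(image):
    """Hypothetical stand-in for a frozen, pre-trained feature extractor.

    Maps an image to two features: the average absolute intensity change
    between horizontal neighbours and between vertical neighbours.
    Nothing in this function is updated during training.
    """
    h, w = len(image), len(image[0])
    horiz = sum(abs(image[i][j + 1] - image[i][j]) for i in range(h) for j in range(w - 1))
    vert = sum(abs(image[i + 1][j] - image[i][j]) for i in range(h - 1) for j in range(w))
    return [horiz / (h * (w - 1)), vert / ((h - 1) * w)]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_head(images, labels, epochs=500, lr=0.5):
    """Train only a logistic-regression head on top of the frozen backbone."""
    features = [backbone(img) for img in images]  # computed once; backbone is frozen
    weights, bias = [0.0, 0.0], 0.0
    n = len(features)
    for _ in range(epochs):
        grad_w, grad_b = [0.0, 0.0], 0.0
        for f, y in zip(features, labels):
            p = sigmoid(weights[0] * f[0] + weights[1] * f[1] + bias)
            error = p - y  # gradient of binary cross-entropy w.r.t. the logit
            grad_w[0] += error * f[0]
            grad_w[1] += error * f[1]
            grad_b += error
        # Full-batch gradient descent step on the head parameters only
        weights = [weights[k] - lr * grad_w[k] / n for k in range(2)]
        bias -= lr * grad_b / n
    return weights, bias


def predict(image, weights, bias):
    f = backbone(image)
    return 1 if weights[0] * f[0] + weights[1] * f[1] + bias > 0 else 0


# Toy target data set: label 1 = vertical stripes, label 0 = horizontal stripes
vertical_a = [[0, 1, 0, 1] for _ in range(4)]
vertical_b = [[1, 0, 1, 0] for _ in range(4)]
horizontal_a = [[r % 2] * 4 for r in range(4)]
horizontal_b = [[(r + 1) % 2] * 4 for r in range(4)]

weights, bias = train_head(
    [vertical_a, vertical_b, horizontal_a, horizontal_b], [1, 1, 0, 0]
)

# A new, lower-contrast image the head never saw during training
faint_vertical = [[0.0, 0.5, 0.0, 0.5] for _ in range(4)]
print(predict(faint_vertical, weights, bias))  # → 1
```

Because the frozen features already separate the two patterns, training the head alone is enough, and the head also handles a lower-contrast image it never saw. With a real pre-trained CNN, one would often also fine-tune some of its top layers.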

4. Backbone in Neural Network

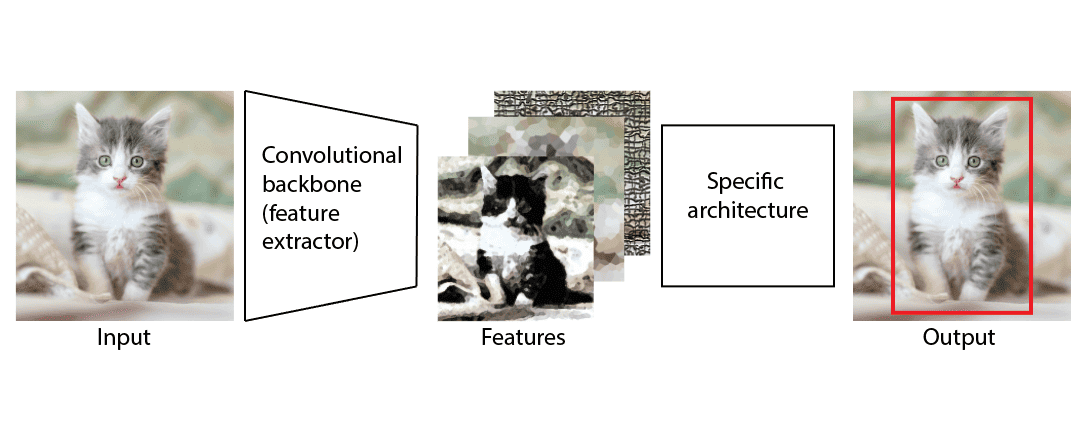

In addition to image classification, more complex CNN architectures can solve other computer vision tasks, such as object detection or segmentation. Thanks to transfer learning, we can build an object detection architecture on top of a CNN that was originally trained for image classification. In this case, the CNN serves as a feature extractor, and it is, in fact, the backbone of the object detection model.

Generally, the term backbone refers to the feature-extracting network that processes input data into a certain feature representation. These feature extraction networks usually perform well as stand-alone networks on simpler tasks, so we can reuse them as the feature-extracting part of more complex models.
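The split between a backbone and task-specific parts can be illustrated with a toy example. In this minimal sketch, `backbone`, `classification_head`, `localization_head`, and `detect_edge` are hypothetical names, and the "backbone" is a hand-written edge detector rather than a trained CNN. What matters is the structure: the feature map is computed once and then shared by one head that decides whether an edge exists and another that says where it is, loosely mirroring how a detector combines classification and localization on top of a single backbone.

```python
def backbone(image):
    """Hypothetical feature extractor: mean absolute horizontal intensity change.

    Returns a 1D feature map with one value per pair of neighbouring columns.
    """
    h, w = len(image), len(image[0])
    return [
        sum(abs(image[i][j + 1] - image[i][j]) for i in range(h)) / h
        for j in range(w - 1)
    ]


def classification_head(features, threshold=0.5):
    """Task 1: does the image contain a vertical edge at all?"""
    return max(features) > threshold


def localization_head(features):
    """Task 2: index of the left column of the strongest edge."""
    return max(range(len(features)), key=lambda j: features[j])


def detect_edge(image):
    """Toy 'detector': one backbone pass shared by two task-specific heads."""
    features = backbone(image)  # computed once, reused by both heads
    found = classification_head(features)
    position = localization_head(features) if found else None
    return found, position


step = [[0, 0, 0, 1, 1, 1] for _ in range(4)]  # dark-to-bright edge
flat = [[0.3] * 6 for _ in range(4)]           # no edge at all

print(detect_edge(step))  # → (True, 2)
print(detect_edge(flat))  # → (False, None)
```

Swapping in a different feature extractor only requires that it return a feature map the heads understand, which is why the same popular CNNs can act as backbones for many different tasks.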

Many popular CNN architectures can serve as backbones in neural networks. Some of them include:

- VGGs – include the VGG-16 and VGG-19 convolutional networks, with 16 and 19 weight layers, respectively. They have proved effective in many tasks, especially image classification and object detection.

- ResNets – or residual neural networks, add skip connections that bypass blocks of convolutional layers, so each block learns a residual on top of its input. Popular versions such as ResNet-50 and ResNet-101 are common backbones for object detection and semantic segmentation.

- Inception v1 – also known as GoogLeNet, is one of the most widely used convolutional neural networks as a backbone in many computer vision applications, including video summarization and action recognition.
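The skip connection that distinguishes ResNets can be written as y = ReLU(F(x) + x), where F is the stack of layers inside the block. Below is a minimal pure-Python sketch of one such block, assuming small dense weight matrices in place of the convolutions and batch normalization that a real ResNet uses; the names `residual_block`, `w1`, and `w2` are illustrative.

```python
def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]


def relu(vector):
    return [max(0.0, v) for v in vector]


def residual_block(x, w1, w2):
    """Sketch of a ResNet-style block: y = ReLU(F(x) + x).

    F is two linear layers with a ReLU in between; a real ResNet uses
    convolutions (usually with batch normalization) instead.
    The skip connection adds the unchanged input x to F(x).
    """
    residual = matvec(w2, relu(matvec(w1, x)))
    return relu([r + xi for r, xi in zip(residual, x)])


x = [1.0, 2.0, 3.0]
zeros = [[0.0] * 3 for _ in range(3)]
print(residual_block(x, zeros, zeros))  # → [1.0, 2.0, 3.0]

identity = [[1.0 if i == j else 0.0 for j in range(3)] for i in range(3)]
print(residual_block(x, identity, identity))  # → [2.0, 4.0, 6.0]
```

With all-zero weights, the block passes its (non-negative) input through unchanged, so learning an identity mapping is easy. This is commonly cited as one reason very deep ResNets such as ResNet-50 and ResNet-101 remain trainable and work well as backbones.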

5. Conclusion

In this article, we described what a backbone represents in neural networks and which backbones are the most popular. We use the term backbone mostly in computer vision, where it refers to a popular CNN that serves as the feature extractor in a more complex neural network architecture.
