Simplified Docker Networking for Developers

When I joined Docker Inc., my hiring manager told me that everything they do is fairly simple except networking. Most people would agree, and it’s even more true from a developer’s standpoint. Search for any Docker networking article and it invariably dives into network namespaces, netns commands, ip links, iptables, IPVS, veths, MAC addresses, and so on. While the underpinnings are important, most of these articles fail to paint a simple picture for developers who want to understand the overall traffic flow and how communication between containers works. That’s a common pain point I have heard from most of my customers, so I decided to simplify this convoluted topic. I hope you find my attempt useful.

Let’s start with networking in general, whose job is to make computers (VMs and devices) talk to each other. Networking primarily relies on two components: a bridge (or switch) and a router. Interestingly, most of us already use these components at home to connect our devices to the internet. If you need a refresher, check out this link on home networking. Docker containers are no different from those devices, and connecting containers needs a similar setup. There are two parts to establishing connectivity: connecting containers running on a single VM, and connecting containers spread across VMs (nodes).

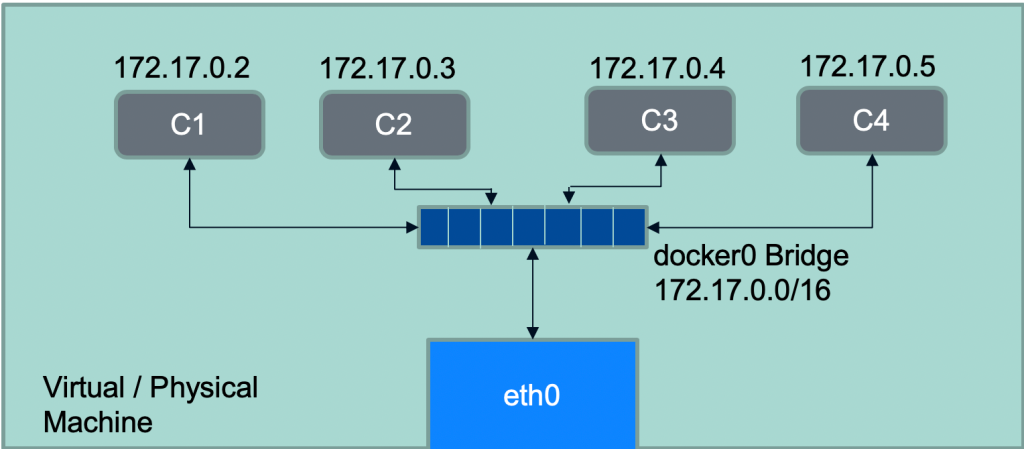

Connecting containers on a single VM is simple and, I guess, well understood. Docker uses a virtual bridge called ‘docker0’, and all the containers running on a standalone Docker instance are connected to it. One end of the bridge is connected to the host’s Ethernet interface, which allows inbound traffic to containers and outbound traffic from containers to external services. The docker0 bridge also has a default CIDR range of 172.17.0.0/16, out of which each container gets its own IP address. To access a container externally, you map a host port to a container port, for example “docker container run -d -p 8585:80 nginx”. With that, you should be able to browse to the NGINX container at <host-ipaddress>:8585.
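As a rough illustration of how addresses come out of that range, here is a small Python sketch using the standard ipaddress module. It assumes the bridge takes the first usable address as its gateway and that containers receive the following addresses in start order. The container names are hypothetical, and Docker’s real allocator may hand out addresses differently.

```python
import ipaddress

# Default docker0 range quoted in the text.
bridge_net = ipaddress.ip_network("172.17.0.0/16")

hosts = bridge_net.hosts()  # iterator over the usable host addresses
gateway = next(hosts)       # assumption: the bridge itself takes the first address

# Hypothetical: three containers started one after another.
container_names = ["c1", "c2", "c3"]
container_ips = {name: next(hosts) for name in container_names}

print("gateway:", gateway)
for name, ip in container_ips.items():
    # Every container address falls inside the bridge's CIDR range.
    print(name, ip, ip in bridge_net)
```

Under these assumptions the gateway is 172.17.0.1 and the three containers get 172.17.0.2 through 172.17.0.4, all inside the same /16.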

Docker containers communicating on a single VM

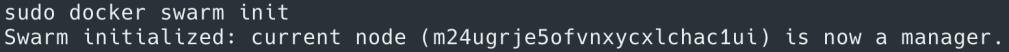

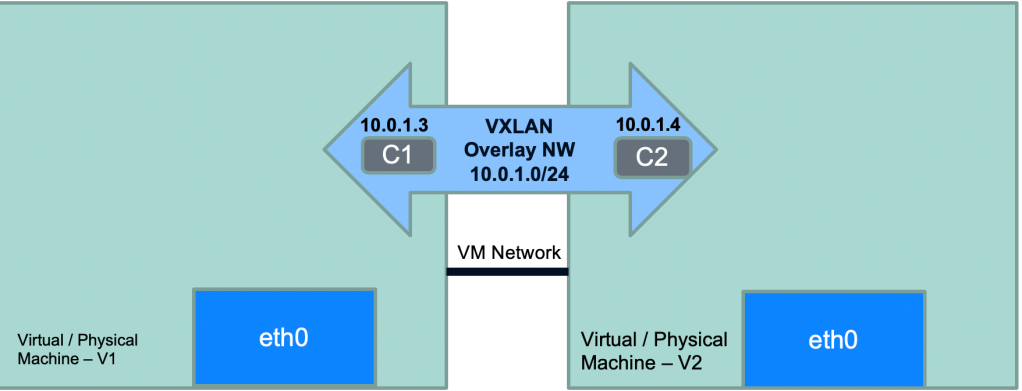

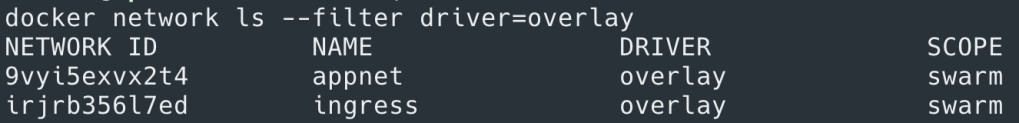

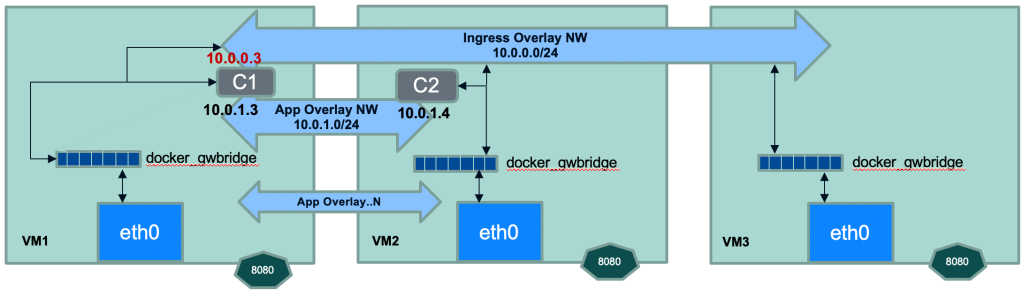

Now, what about containers running across nodes, which is typical with Swarm (running Docker containers at scale)? How do we connect all these containers? While the VMs themselves are typically connected via a network, they have no clue about the containers running on them or the IPs assigned to those containers. It would be very tedious to make physical network changes to enable this communication. That’s where Docker leverages software-defined networking, using VXLAN-based overlay networks (note that the VXLAN overlay is just one of the network drivers supported by Docker; for a more comprehensive description of Docker networking, refer to this article). When you initialize Swarm on a Docker node (“docker swarm init”), it creates two networks by default, docker_gwbridge and ingress (I will get to ingress at the end), to enable cross-node container communication.

Let’s take an example. Consider two containers, a UI container (C1) and a DB container (C2), running on different nodes, and suppose you want C1 to communicate with C2. To make this possible, you create a user-defined overlay network and create the C1 and C2 containers attached to it.

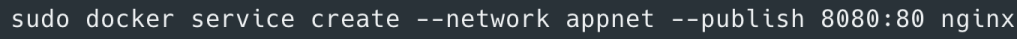

    docker network create --driver overlay appnet
    docker service create --network appnet nginx   # attach the UI or DB service to the overlay

Yes, it’s that simple. When you create the overlay network ‘appnet’, Docker assigns it a CIDR range, and attached containers are assigned IPs from that range. You can also customize the CIDR pool for all overlay networks at swarm initialization with “docker swarm init --default-addr-pool <ip-range>”, or set the range of an individual network with the --subnet flag of “docker network create”.
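To picture how one address pool can serve several overlay networks, here is a minimal Python sketch that splits a pool into equal subnets. The pool 10.20.0.0/16 and the function name are hypothetical. The /24 size mirrors what I understand to be the default of the --default-addr-pool-mask-length option, and the order in which Docker actually hands out subnets may differ.

```python
import ipaddress
import itertools


def carve_overlay_subnets(pool, mask_length, count):
    """Return the first `count` subnets of size /mask_length inside `pool`."""
    network = ipaddress.ip_network(pool)
    subnets = network.subnets(new_prefix=mask_length)
    # islice stops quietly if the pool holds fewer subnets than requested.
    return list(itertools.islice(subnets, count))


# Hypothetical pool, as it might be passed to --default-addr-pool.
for subnet in carve_overlay_subnets("10.20.0.0/16", 24, 3):
    print(subnet, "usable addresses:", subnet.num_addresses - 2)
```

Each new overlay network would then draw its container IPs from one of these /24 blocks, which is the same idea as ‘appnet’ getting its own CIDR range.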

Here’s a diagrammatic view of the above setup: an overlay network with a CIDR range of 10.0.1.0/24 connecting individual containers with IPs 10.0.1.3 and 10.0.1.4.

Docker containers communicating across VMs using Overlay network

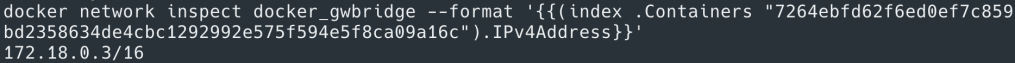

So is that all? Mostly yes, but we still need to figure out inbound and outbound access for these containers. Let’s start with external (outbound) access: how do containers running on these overlay networks reach the external ecosystem? What if the database we just mentioned runs outside the Swarm cluster? For standalone Docker nodes, this was handled by connecting all containers to the docker0 bridge. Swarm mode follows a similar pattern with a gateway bridge, docker_gwbridge, which is created on every node when the node joins the Swarm cluster. Each container in an overlay network has a gateway endpoint attached to the Docker gateway bridge for external access. To verify this, you can inspect the docker_gwbridge network and find the container ID of the attached container, as shown below.

Docker containers communicating across nodes using overlay and docker_gwbridge networks

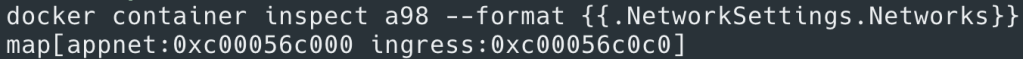

You might wonder why the gateway bridge is connected to each individual container and not to the overlay (VXLAN) network as a whole. That’s because containers can be running on any node, and a single gateway bridge for the entire overlay would be unreliable and slow.

That brings us to the last component: inbound access to containers. You might be tempted to think it should be straightforward. After all, every container has outbound access through docker_gwbridge, and just like the standalone approach we can use the ‘-p’ flag to allow access via a host port. But things aren’t that simple. While port-based host access does work, it pins your access to a specific node; if the container moves to a different node, you have to change your request URL to point to that node. Take a pause and think it over.

To solve this puzzle, Docker uses the ‘ingress’ network. Ingress (also called the routing mesh) is just another overlay network to which every node in the Docker cluster is connected via docker_gwbridge. The idea is that you can still publish your app on a specific port just like in standalone Docker, but now the traffic is routed to the underlying app regardless of the node the request landed on. This is the routing magic: your request lands on node #3 and still gets routed to the container running on node #1. The magic is done by attaching every app container that exposes a published port to the ingress overlay in addition to its own overlay network (see the diagram below, where container ‘C1’ is attached to both the app overlay and the ingress overlay). This is also why you will see containers like C1 having dual IPs. Attaching containers to both the ingress and app overlay networks is necessary; without it, ingress has no way to route the request. Remember that overlay networks are isolated from external traffic, so the container attaches to both networks to allow for seamless routing.

You can verify this yourself: create a new Swarm service with published ports, attach it to the app overlay network, and inspect the underlying container. It is attached to both the appnet and ingress overlay networks and has dual IPs.
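To make the routing idea concrete, here is a toy model in Python. It is only a sketch of the behavior described above, not how Docker implements the mesh. The class name, node names, and container IPs are all hypothetical, and the round-robin choice between replicas is an assumption made for illustration.

```python
import itertools


class RoutingMesh:
    """Toy model: any node accepts a published port and forwards to a task."""

    def __init__(self, nodes):
        self.nodes = set(nodes)
        self._backends = {}  # published port -> cycling iterator over tasks

    def publish(self, port, tasks):
        """Register the (node, container_ip) pairs that serve a published port."""
        tasks = list(tasks)
        if not tasks:
            raise ValueError("a published service needs at least one task")
        self._backends[port] = itertools.cycle(tasks)

    def route(self, entry_node, port):
        """Return the task that serves a request arriving on entry_node."""
        if entry_node not in self.nodes:
            raise ValueError(f"{entry_node} is not part of the cluster")
        if port not in self._backends:
            raise LookupError(f"nothing is published on port {port}")
        # The entry node does not matter: every node can reach every task.
        return next(self._backends[port])


mesh = RoutingMesh(["node1", "node2", "node3"])
mesh.publish(8585, [("node1", "10.0.0.5")])  # hypothetical ingress IP of C1
print(mesh.route("node3", 8585))             # request lands on node3
```

The request enters at node3 but is answered by the task on node1, which is the property that frees you from pinning the request URL to a specific node.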

External access to Docker containers via Ingress routing mesh

So there you go. I have taken some liberty in abstracting things, but I would be very happy if all my customers had this level of understanding when I start working with them. You can easily extend this setup by adding an L7 load balancer and reverse proxy such as Traefik or Interlock. That lets you do all app routing through the reverse proxy, which becomes the only component exposed through the ingress overlay. Docker Engine has DNS and load-balancing capability too: name resolution works for services in the same overlay network (try pinging the DB container from the UI using the service name of the DB swarm service instead of an IP address), and there is built-in support for load balancing across service replicas via virtual IPs (you can look up the VIP using “docker service inspect <service-name>”). Maybe I will cover these aspects in a future post.

That’s all for this post. Did I demystify the Docker networking magic for you? Is there anything else I could simplify further? Please let me know in the comments.
